Introduction

These docs are the open draft space of our chief operating officer and do not necessarily reflect the up-to-date setup deployed in production. We are in the process of moving the docs into a website/blog format, so treat the information here as raw, like HackMD posts.

Expect low-level mumbling about internet technologies as well as hardware specs of the infrastructure we have built ourselves, part by part, to meet the specs that hosting web3 successfully requires, which are unconventional for the server world. The most important components for blockchain performance are memory and especially storage for the Merkle trie state, as well as a CPU with a high clock speed.

Some rules of thumb when choosing components for validator infrastructure: you want a CPU capable of running at 5GHz (single-thread score of 3,000+ on cpubenchmark) and at least a PCIe 4.0 NVMe drive with a sustained performance of 1,800MB/s (drives are usually marketed with their temporary cache performance of 7,000MB/s). Ideally, collators/block builders should run on PCIe 5/PCIe 6 storage and be executed with multiple cores/validators.

Random access memory, better known as RAM, should run at 4800MHz, meaning you can only use a single stick per CPU, because the motherboard's memory controller is not able to deliver enough voltage for more. For example, populating all 4 slots on AM5 motherboards limits RAM performance to only 3600MHz instead of the full 4800-6000MHz.
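To put the 3600 vs 4800-6000 difference into numbers, here is a rough sketch of theoretical peak memory bandwidth. It assumes the speeds above are transfer rates (MT/s), a 64-bit (8-byte) data bus per channel, and the dual-channel layout of AM5 desktop platforms. The function name is made up for illustration, and real-world throughput will be lower than these peaks.

```python
def peak_bandwidth_gbs(mt_per_s: int, channels: int = 2, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes per second).

    Assumes one transfer moves `bus_bytes` bytes per channel.
    """
    return mt_per_s * 1_000_000 * bus_bytes * channels / 1e9


# Compare the downclocked 4-stick case with the full-speed cases.
for speed in (3600, 4800, 6000):
    print(f"DDR5-{speed}: {peak_bandwidth_gbs(speed):.1f} GB/s")
```

Under these assumptions, dropping from 4800 to 3600 costs a quarter of the theoretical peak bandwidth.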

The specs for JAM, Polkadot's new relay chain, are going to be a 16-core 5GHz CPU with SMT disabled, 8TB of NVMe, 64GB of DDR5, and networking at ~500Mbit/s with a global routing table without heavily oversubscribed routes, since networking is no longer gossip-based but direct, authenticated point-to-point.
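As a quick way to compare a machine against these JAM targets, a minimal sketch follows. The `HostSpec` fields, the `jam_gaps` helper, and the candidate host are all hypothetical. The thresholds are simply the numbers quoted above, not an official requirements list.

```python
from dataclasses import dataclass


@dataclass
class HostSpec:
    cores: int
    clock_ghz: float
    smt_enabled: bool
    nvme_tb: float
    ram_gb: int
    ram_type: str
    network_mbit: int


def jam_gaps(host: HostSpec) -> list[str]:
    """Return the JAM targets from the text that this host misses."""
    checks = [
        (host.cores >= 16, "needs 16 cores"),
        (host.clock_ghz >= 5.0, "needs a 5GHz clock"),
        (not host.smt_enabled, "SMT should be disabled"),
        (host.nvme_tb >= 8, "needs 8TB of NVMe"),
        (host.ram_gb >= 64 and host.ram_type == "DDR5", "needs 64GB of DDR5"),
        (host.network_mbit >= 500, "needs ~500Mbit/s networking"),
    ]
    return [message for ok, message in checks if not ok]


# Hypothetical host: fast enough, but SMT is on and storage is too small.
candidate = HostSpec(cores=16, clock_ghz=5.0, smt_enabled=True, nvme_tb=4,
                     ram_gb=64, ram_type="DDR5", network_mbit=1000)
print(jam_gaps(candidate))
```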

Hardware Infrastructure

Our primary goal is to deliver a high-performance and secure blockchain infrastructure that fosters trust and reliability. We aim to achieve this by focusing on the critical elements of blockchain technology - namely, ensuring high single-thread performance for validator services, and low latency for RPC services, among others.

Network Architecture

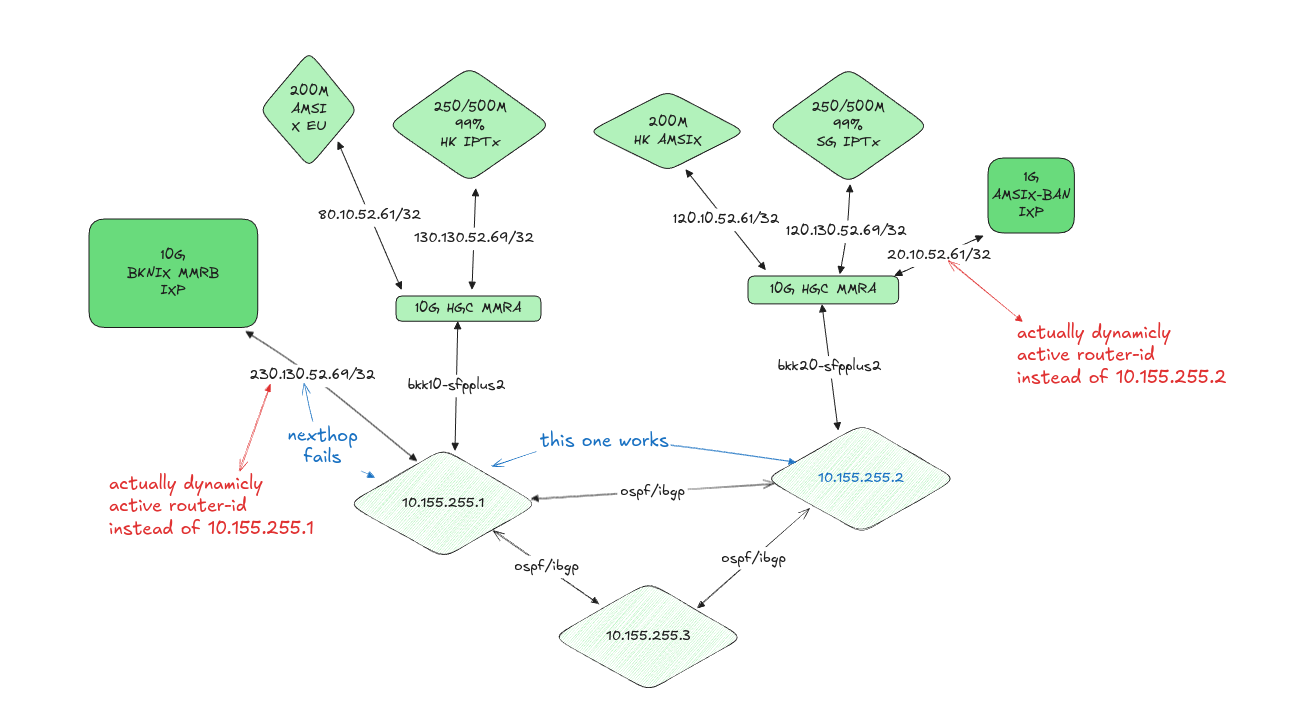

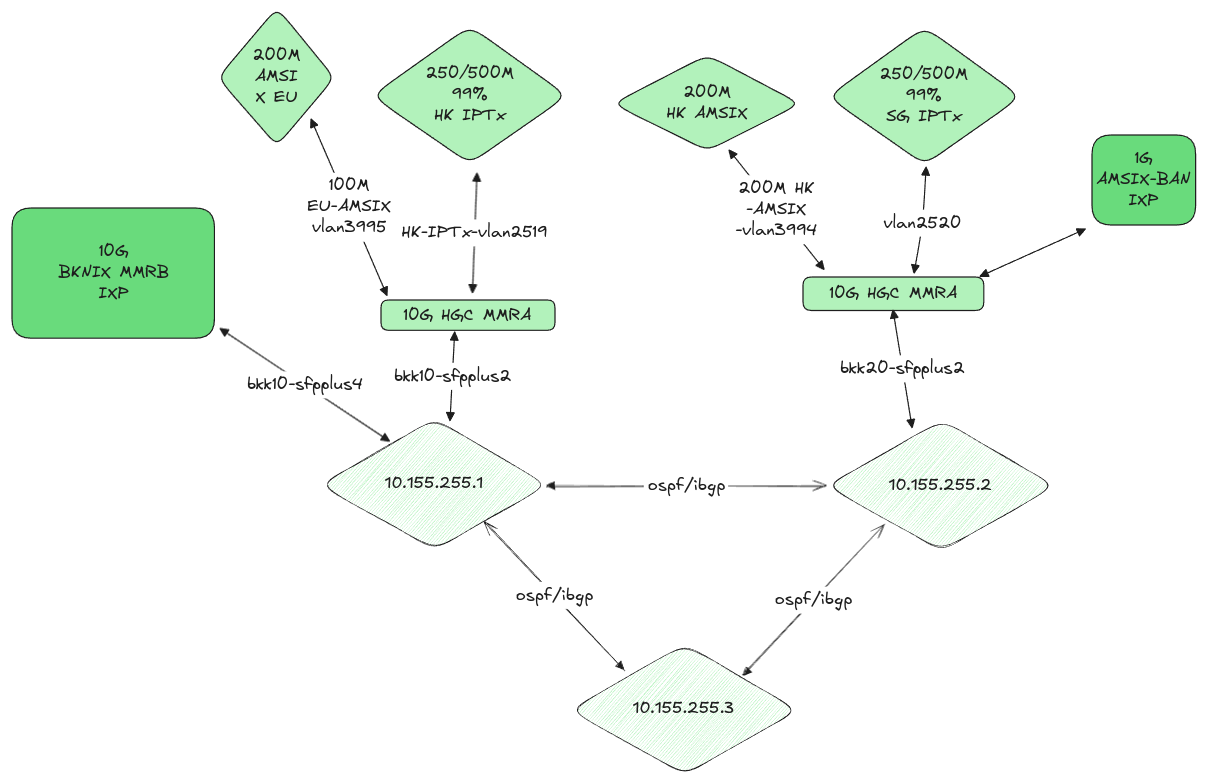

For reference, our network topology includes the following components:

Upstream Providers

- BKNIX, AMS-IX (Bangkok, Hong Kong, Europe)

- HGC IPTX (Hong Kong, Singapore)

- Backup circuits: HGC IPTX TH-HK and TH-SG

Edge Routers

- BKK00 (MikroTik CCR2216)

- BKK20 (MikroTik CCR2216)

Core Routers

- BKK10 (MikroTik CCR2116) - VRRP Primary

- BKK50 (MikroTik CCR2004) - VRRP Fallback
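The primary/fallback relationship between BKK10 and BKK50 can be illustrated with a simplified model of VRRP master election: among routers that are still alive, the highest priority wins, with the higher IP address as tie-breaker. The priorities and addresses below are placeholders (documentation range 192.0.2.0/24), not our production values, and the sketch ignores advertisement timers and preemption settings.

```python
from dataclasses import dataclass, replace
from ipaddress import IPv4Address
from typing import Optional


@dataclass(frozen=True)
class VrrpRouter:
    name: str
    priority: int          # higher wins the election
    address: IPv4Address   # tie-breaker: higher address wins
    alive: bool = True


def elect_master(routers: list[VrrpRouter]) -> Optional[VrrpRouter]:
    """Pick the VRRP master among the routers that are still alive."""
    candidates = [r for r in routers if r.alive]
    if not candidates:
        return None
    return max(candidates, key=lambda r: (r.priority, r.address))


# Placeholder priorities and addresses, for illustration only.
routers = [
    VrrpRouter("BKK10", priority=200, address=IPv4Address("192.0.2.10")),
    VrrpRouter("BKK50", priority=100, address=IPv4Address("192.0.2.50")),
]
print(elect_master(routers).name)

# Simulate the primary going down: the fallback takes over.
after_failure = [replace(routers[0], alive=False), routers[1]]
print(elect_master(after_failure).name)
```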

Storage Infrastructure

- High-speed switches: BKK30, BKK40 (100G)

- Storage servers: BKK06 (EPYC 7713), BKK07 (EPYC 9654), BKK08 (EPYC 7742)

- HA Quorum Network for redundancy

Validator Nodes

- BKK01, BKK02 (Ryzen 5600G)

- BKK03 (Ryzen 7950X3D)

- BKK04 (Ryzen 9950X)

- BKK09 (Ryzen 7945HX)

- BKK11, BKK13 (Ryzen 5950X)

- BKK12 (Ryzen 7950X)

Management

- BKK60 (MikroTik CRS354) for IPMI access to all devices

Performance Considerations

Validator services in blockchain infrastructures demand high single-thread performance due to the nature of their operations. Validators, in essence, validate transactions and blocks within the blockchain. They act as the arbitrators of the system, ensuring the veracity and accuracy of the information being added to the blockchain. This is an intensive process that involves complex computations and encryption, thus requiring a high-performance, single-thread system to maintain efficiency.

The low latency required for our RPC services is another vital factor in our hardware design. RPC, or Remote Procedure Call, is a protocol that allows a computer program to execute a procedure in another address space, usually on another network, without the programmer needing to explicitly code for this functionality. In simpler terms, it's a way for systems to talk to each other. Low latency in these operations is crucial to ensure a smooth and seamless dialogue between various systems within the blockchain. A delay or a lag in these communications can cause bottlenecks, leading to a slowdown in overall operations.
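A minimal sketch of how RPC latency could be sampled is shown below. The `send` callable stands in for whatever transport is used (HTTP or WebSocket) and is replaced here by a fake that returns immediately, so the numbers it prints are meaningless. `system_health` is used as an example Substrate RPC method, and the helper names are made up for illustration.

```python
import json
import statistics
import time
from typing import Callable


def rpc_payload(method: str, params=None, request_id: int = 1) -> bytes:
    """Build a JSON-RPC 2.0 request body."""
    body = {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params or []}
    return json.dumps(body).encode()


def measure_latency_ms(send: Callable[[bytes], bytes], payload: bytes, samples: int = 50) -> dict:
    """Time `samples` round trips through `send` and summarize them in milliseconds."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        send(payload)
        timings.append((time.perf_counter() - start) * 1000)
    timings.sort()
    p99_index = min(len(timings) - 1, int(len(timings) * 0.99))
    return {
        "median": statistics.median(timings),
        "p99": timings[p99_index],
        "max": timings[-1],
    }


def fake_send(payload: bytes) -> bytes:
    """Stand-in transport; a real one would post the payload to an RPC endpoint."""
    return b'{"jsonrpc":"2.0","id":1,"result":{}}'


stats = measure_latency_ms(fake_send, rpc_payload("system_health"), samples=20)
print(stats)
```

Looking at the tail (p99, max) rather than only the median is what reveals the occasional slow request that causes the bottlenecks described above.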

Hardware Specifications

The hardware components and their configurations we have selected are specifically designed to address these needs. By leveraging advanced technologies like the AMD Ryzen 9 7950X for its superior single-thread performance, DDR5 memory for fast data retrieval, and NVMe SSDs for their exceptional speed in data storage and retrieval, we aim to provide an infrastructure that can effectively handle the demands of blockchain technology.

Key Components

| Node Type | Processor | Memory | Storage | Purpose |
|---|---|---|---|---|
| Validator | AMD Ryzen 7950X/5950X | DDR5/DDR4 | NVMe SSDs | Transaction validation |
| Storage | AMD EPYC 7713/9654/7742 | High-capacity | Redundant arrays | Blockchain data storage |
| Edge | MikroTik CCR2216 | - | - | Network edge routing |
| Core | MikroTik CCR2116/CCR2004 | - | - | VRRP redundant core routing |

Scalability and Resilience

Our infrastructure is also designed to ensure scalability and flexibility. As the demands of the blockchain ecosystem grow, so too should our capacity to handle these increasing demands. Hence, our hardware design also incorporates elements that will allow us to easily scale up our operations when necessary.

The network features full redundancy with:

- Cross-connected edge routers with multiple upstream providers

- Geographic diversity with connections to Hong Kong, Singapore, Bangkok, and Europe

- Backup IPTX circuits for fallback connectivity

- VRRP router redundancy for core routing

- High-speed 100G storage networking

- HA Quorum Network for storage resilience

In essence, our hardware is purpose-built to deliver high-performance blockchain operations that are secure, reliable, and capable of scaling with the demands of the evolving blockchain ecosystem.

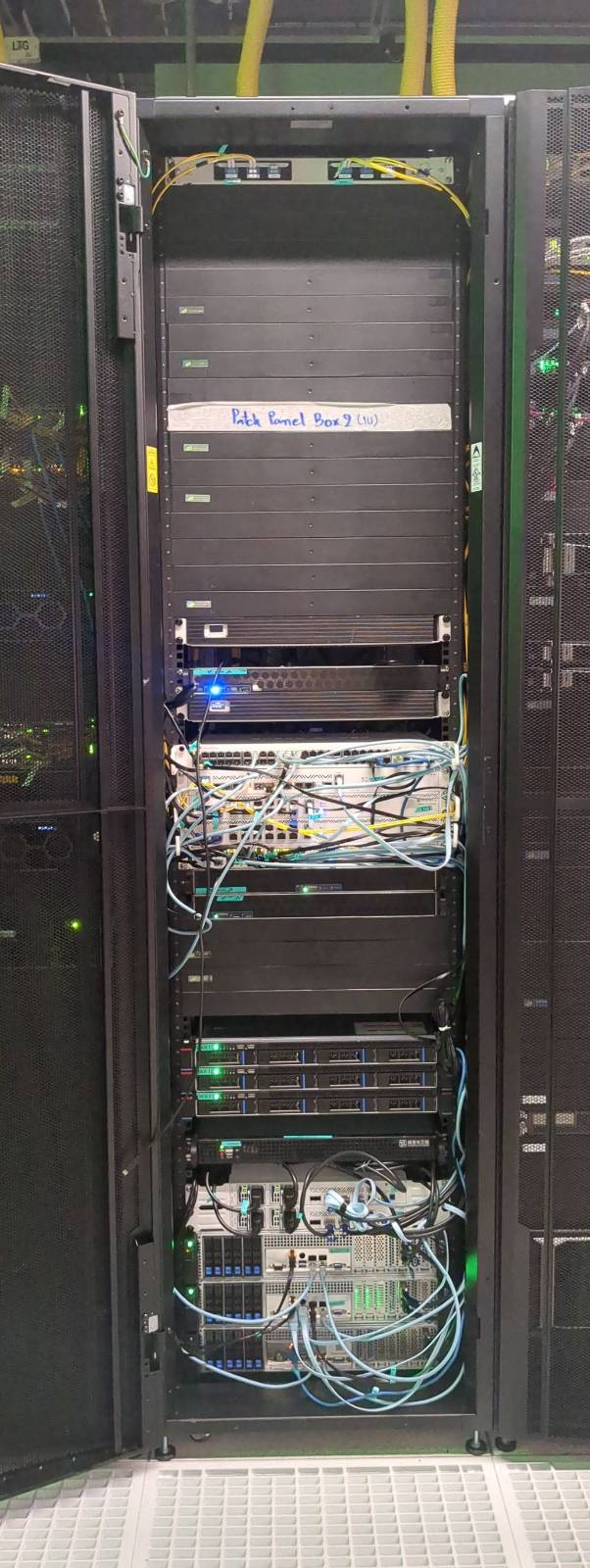

Rack

At the heart of our operations is a meticulously designed server infrastructure, securely housed within ten units of a top-tier 48U rack. Providing approximately 230 liters of computational capacity, our setup is powered by a robust dual 2kW, 220V power system that underlines our commitment to delivering superior performance and steadfast availability.

Our server infrastructure is hosted within a carrier-neutral facility, strategically enabling seamless and robust connections with a broad range of service providers, ISPs, and cloud platforms. This network versatility fosters enhanced performance and unyielding reliability, thus ensuring a consistently superior user experience.

More than a mere assembly of servers, our setup is a comprehensively designed ecosystem meticulously architected to achieve maximum efficiency. Leveraging location flexibility, our infrastructure can be configured across multiple strategic points to guarantee optimal network connectivity and minimized latency.

Direct peering arrangements with major local and international internet exchanges ensure broad bandwidth and unwavering connectivity. Coupled with floor and inter-floor cross-connect cabling, we have fostered a well-connected network capable of facilitating smooth data transfer between servers and racks.

Our infrastructure is further enhanced with a suite of cutting-edge networking devices, including industry-leading routers and switches. Services such as KVM over IP for remote server management, alongside on-site technical support and smart hands as a service, amplify our operational efficiency.

To guarantee optimal performance and longevity of our hardware, a tightly regulated environment is maintained. Our facility features controlled air temperature and humidity, ensuring the hardware operates within optimal conditions. Additionally, we have installed a UPS and backup power generators to mitigate the risk of power interruptions.

Security is paramount. Our facility, with ISO 27001 certification, employs a rigorous system of access control with logging and video surveillance, ensuring a safe and secure environment for our infrastructure. Additional safety measures such as fire alarms and smoke protection systems are in place to protect our hardware. A dedicated network operations center, operational 24/7, stands ready to promptly address any technical concerns.

Our setup also incorporates a raised floor design, an element that demonstrates our meticulous attention to detail. This design improves air distribution and cable management, leading to thermal efficiency and a well-organized operational environment.

Networking Hardware Overview

Edge Routers

- CCR2216 (bkk10): Edge router for Bangkok site.

- CCR2116 (bkk20): Edge router for Bangkok site.

Core Routers

- CCR2004 (bkk50): Core router for Bangkok site.

High Availability (HA) Switches

- CRS504 (bkk30): HA switch for Bangkok site.

- CRS504 (bkk40): HA switch for Bangkok site.

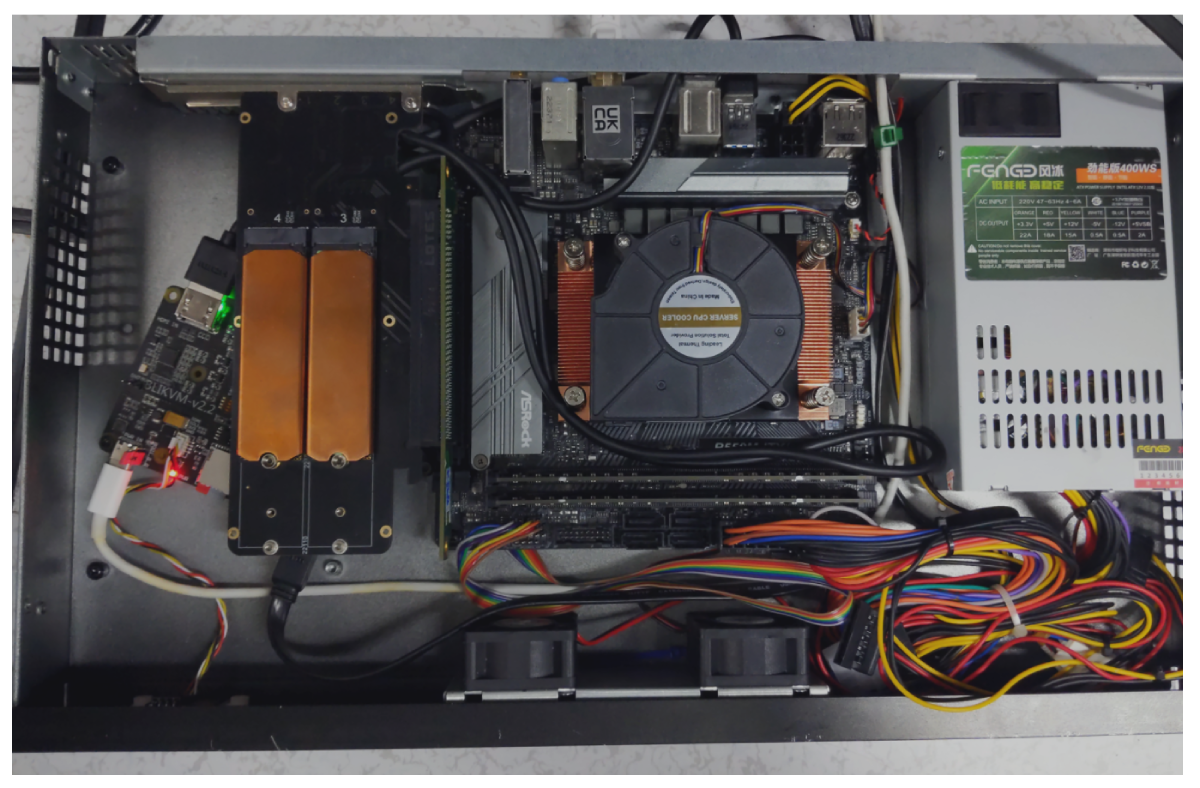

BKK01 - Validator

CPU

AMD RYZEN 5 5600G 6-Core 3.7 GHz (4.6 GHz Max Boost) Socket AM4 65W

The heart of our operations, the 6-core AMD RYZEN 5 5600G, offers excellent performance for blockchain applications. It provides robust and reliable service even under demanding workloads.

CPU Cooler

For managing the thermal performance of our CPU, we use the COOLSERVER P32 CPU Cooler. It's equipped with high-quality heatpipes and can handle the Ryzen 5 5600G even under intensive workloads.

RAM

Our setup uses 2 modules of 32GB DDR4 RAM from Hynix, providing us with ample bandwidth and ensuring smooth server operations.

Motherboard

The MSI A520M-ITX/ac motherboard is an engineering marvel that brings together the performance of the consumer world with the dependability of server-grade hardware. This motherboard supports the AMD Ryzen series CPUs and DDR4 memory, promising speed, reliability, and scalability.

Storage

4x 2TB NVMe Monster Storage 3D TLC SSD - R: 7,400MB/s, W: 6,600MB/s

For storage, we use 4 Monster Storage 3D TLC NVMe SSDs, each of 2TB capacity. These high-speed SSDs are known for their exceptional performance and efficiency in data storage and retrieval.

Power unit

The second-hand case comes with an integrated 400W power supply unit (PSU). Despite being second-hand, the PSU is in good condition and provides a reliable power source for the system.

Chassis

A second-hand case with the 400W power supply unit integrated into it.

KVM

BliKVM v1 CM4 "KVM over IP" Raspberry Pi CM4 HDMI CSI PiKVM v3

A modern, highly secure, and programmable KVM solution running on Arch Linux, which provides exceptional control over your server, akin to physical access. With an easy build process, it boasts minimal video latency (about 100 ms) and a lightweight Web UI accessible from any browser. It emulates mass storage drives and allows ATX power management, secure data transmission with SSL, and local Raspberry Pi health monitoring. You can also manage GPIO and USB relays via its web interface. The PiKVM OS is production-ready, supports a read-only filesystem to prevent memory card damage, offers extensible authorization methods, and enables automation with macros.

Features of PiKVM:

- Fully-featured and modern IP-KVM: PiKVM is up-to-date with the latest KVM technologies.

- Easy to build: PiKVM offers ready-to-use OS images and a friendly build environment.

- Low video latency: With approximately 100 milliseconds of video latency, it provides one of the smallest delays of all existing solutions.

- Lightweight Web UI and VNC: The user interface is accessible through any browser, with no proprietary clients required. VNC is also supported.

- Mass Storage Drive Emulation: On Raspberry Pi 4 and ZeroW, PiKVM can emulate a virtual CD-ROM or Flash Drive. A live image can be uploaded to boot the attached server.

- ATX power management: PiKVM supports simple circuits for controlling the power button of the attached server.

- Security: PiKVM is designed with strong security, using SSL to protect traffic.

- Local monitoring: PiKVM monitors the health of the Raspberry Pi board and provides warnings for potential issues.

- GPIO management: Control GPIO and USB relays via the web interface.

- Production-ready: PiKVM OS is based on Arch Linux ARM and can be customized for any needs.

- Read-only filesystem: The OS runs in read-only mode to prevent damage to the memory card due to a sudden power outage.

- Extensible authorization methods: PiKVM supports integration into existing authentication infrastructure.

- Macro scripts: Repetitive actions can be automated with keyboard & mouse action macros.

- Open & free: PiKVM is open-source software, released under the GPLv3.

BKK02 - Validator 2

CPU

AMD RYZEN 5 5600G 6-Core 3.7 GHz (4.6 GHz Max Boost) Socket AM4 65W

The heart of our operations, the 6-core AMD RYZEN 5 5600G, offers excellent performance for blockchain applications. It provides robust and reliable service even under demanding workloads.

CPU Cooler

For managing the thermal performance of our CPU, we use the COOLSERVER P32 CPU Cooler. It's equipped with high-quality heatpipes and can handle the Ryzen 5 5600G even under intensive workloads.

RAM

Our setup uses 2 modules of 32GB DDR4 RAM from Hynix, providing us with ample bandwidth and ensuring smooth server operations.

Motherboard

The MSI A520M-ITX/ac motherboard is an engineering marvel that brings together the performance of the consumer world with the dependability of server-grade hardware. This motherboard supports the AMD Ryzen series CPUs and DDR4 memory, promising speed, reliability, and scalability.

Storage

4x 2TB NVMe Monster Storage 3D TLC SSD - R: 7,400MB/s, W: 6,600MB/s

For storage, we use 4 Monster Storage 3D TLC NVMe SSDs, each of 2TB capacity. These high-speed SSDs are known for their exceptional performance and efficiency in data storage and retrieval. The downside is that they lack a DRAM cache.

Power unit

The second-hand case comes with an integrated 400W power supply unit (PSU). Despite being second-hand, the PSU is in good condition and provides a reliable power source for the system.

Chassis

A second-hand case with the 400W power supply unit integrated into it.

KVM

BliKVM v1 CM4 "KVM over IP" Raspberry Pi CM4 HDMI CSI PiKVM v3

A modern, highly secure, and programmable KVM solution running on Arch Linux, which provides exceptional control over your server, akin to physical access. With an easy build process, it boasts minimal video latency (about 100 ms) and a lightweight Web UI accessible from any browser. It emulates mass storage drives and allows ATX power management, secure data transmission with SSL, and local Raspberry Pi health monitoring. You can also manage GPIO and USB relays via its web interface. The PiKVM OS is production-ready, supports a read-only filesystem to prevent memory card damage, offers extensible authorization methods, and enables automation with macros.


BKK03 - Bootnode/RPC

CPU: AMD Ryzen™ 9 7950X3D 16-Core 32-Thread 5NM

The AMD Ryzen 9 7950X3D, with its 16-core 32-thread architecture, is the driving force behind our server's high performance. The CPU's multi-core design and high clock speeds are specifically optimized for blockchain applications, ensuring efficient chain synchronization and reliable endpoint service.

Featuring advanced technologies like PCI Express® 5.0 and DDR5, the CPU provides rapid data transfer, essential for low-latency blockchain transactions. The large 128MB L3 cache further bolsters performance by facilitating quick access to frequently used data, enhancing efficiency.

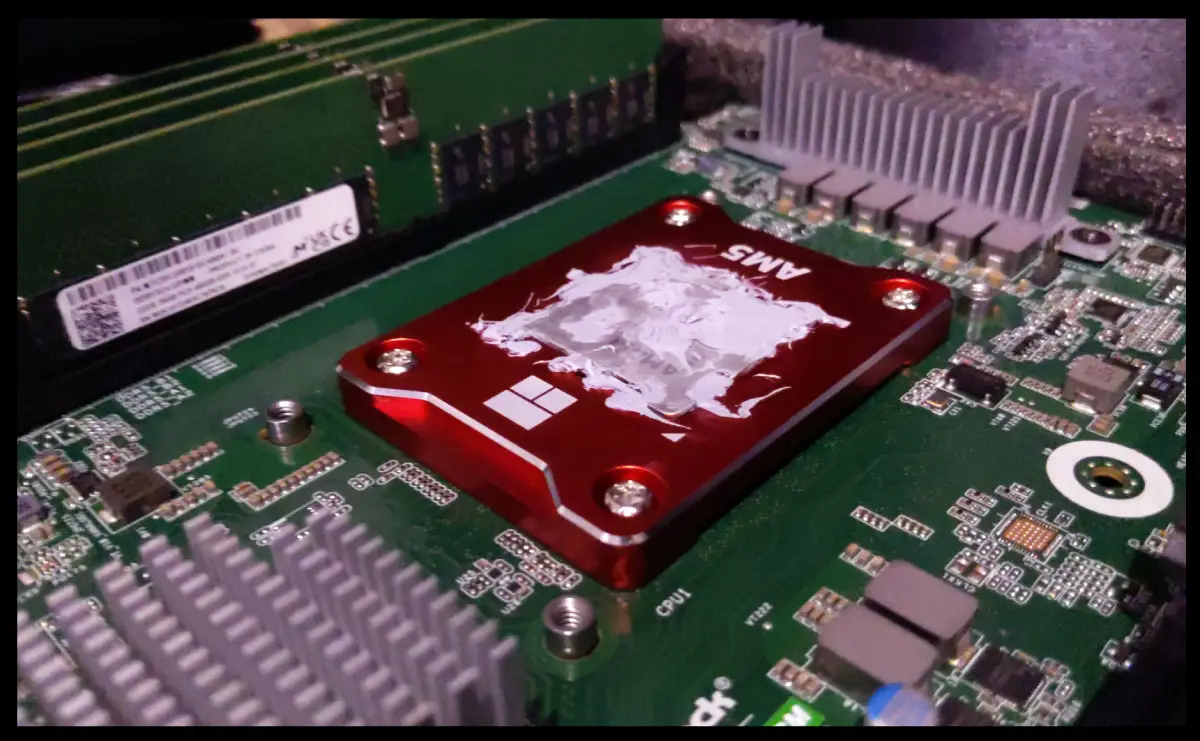

CPU Cooling System

COOLSERVER P32 CPU Cooler

Thermalright aluminium alloy AM5 frame

Cooling efficiency is paramount in maintaining stable performance. Our server utilizes the COOLSERVER P32 AM5 Server CPU Cooler, in conjunction with the Thermalright AM5 frame, to maximize cooling capabilities.

Motherboard: AsRock Rack B650D4U-2L2T/BCM(LGA 1718) Dual 10G LAN

This Micro-ATX motherboard stands as a testament to AsRock's engineering prowess, blending high-performance consumer technology with the robustness of server-grade hardware. The board offers full PCIe 5.0 support and features up to 7 M.2 slots for NVMe storage, enhancing data transfer speeds. Its compatibility with DDR5 ECC UDIMM memory further underlines its suitability for demanding server applications.

Memory: 4x 32GB MICRON DDR5 UDIMM/ECC 4800MHz

Our selection of server-grade DDR5 memory modules provides substantial bandwidth for smooth server operations. Equipped with ECC technology, these modules maintain data integrity, ensuring the reliability of our transactions.

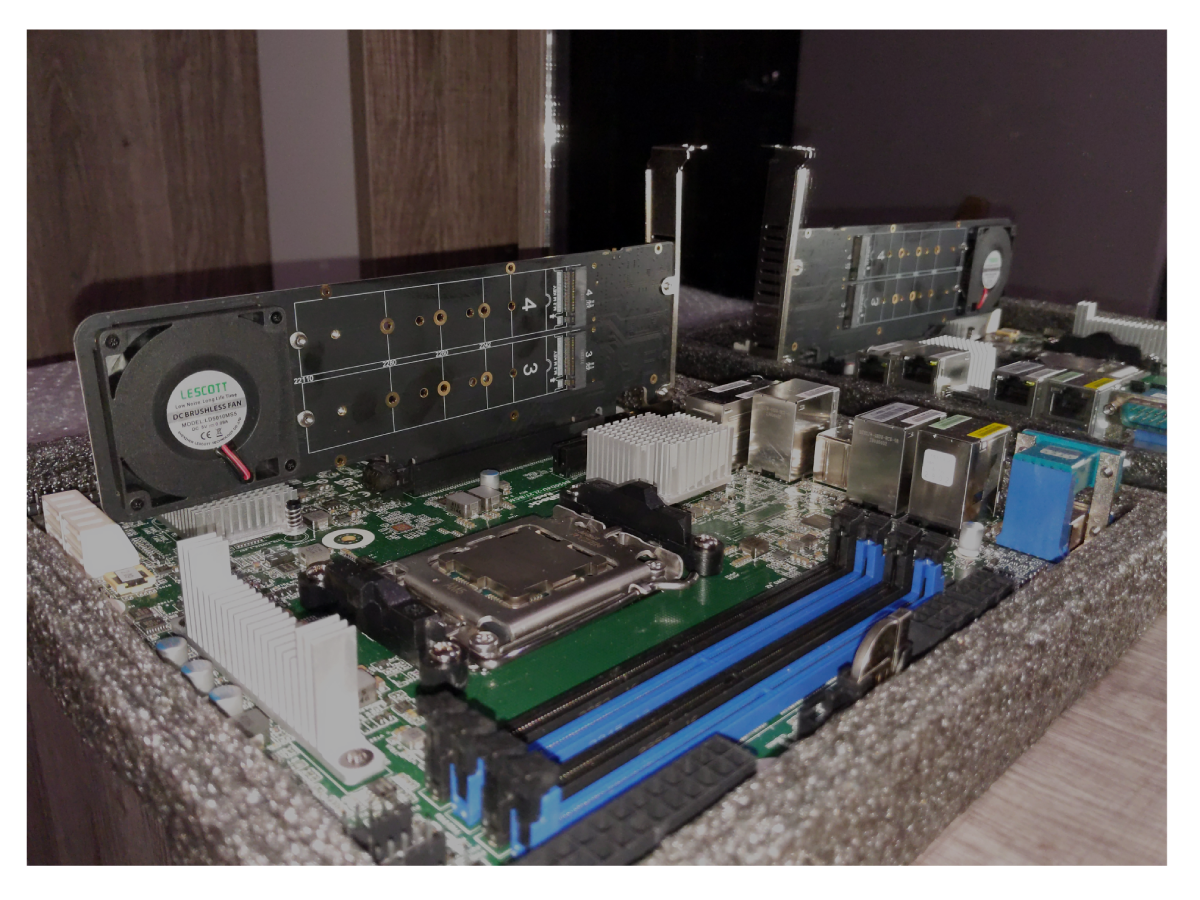

SSD Expansion: NVMe PCIe RAID Adapter 4 Ports NVME SSD to PCI-E 4.0 X16

This expansion card plays a vital role in our data management strategy by enabling the integration of top-tier NVMe SSDs. It contributes to our server's responsiveness by facilitating faster access to stored data.

Storage: 5x 2TB Hanye ME70 NVMe PCIe 4.0 7,200MB/s

Our system's storage consists of five 2TB high-performance ME70 M.2 NVMe SSDs, providing 10TB of high-speed storage. The SSDs' Gen4 PCIe technology and LDPC error correction ensure quick data access and integrity.

Benchmarks

2023-07-31 13:55:08 Running machine benchmarks...

2023-07-31 13:55:34

+----------+----------------+-------------+-------------+-------------------+

| Category | Function | Score | Minimum | Result |

+===========================================================================+

| CPU | BLAKE2-256 | 1.56 GiBs | 783.27 MiBs | ✅ Pass (203.8 %) |

|----------+----------------+-------------+-------------+-------------------|

| CPU | SR25519-Verify | 788.10 KiBs | 560.67 KiBs | ✅ Pass (140.6 %) |

|----------+----------------+-------------+-------------+-------------------|

| Memory | Copy | 27.73 GiBs | 11.49 GiBs | ✅ Pass (241.3 %) |

|----------+----------------+-------------+-------------+-------------------|

| Disk | Seq Write | 2.99 GiBs | 950.00 MiBs | ✅ Pass (322.0 %) |

|----------+----------------+-------------+-------------+-------------------|

| Disk | Rnd Write | 1.29 GiBs | 420.00 MiBs | ✅ Pass (313.4 %) |

+----------+----------------+-------------+-------------+-------------------+

From 5 benchmarks in total, 5 passed and 0 failed (10% fault tolerance).

2023-07-31 13:55:34 The hardware meets the requirements

Read Latency Statistics in nanoseconds:

-------------------------

Minimum: 520 ns

Maximum: 22540 ns

Mean: 914.595734 ns

Standard Deviation: 222.087316 ns

Read IOPS: 953140.861971
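The percentages in the Result column are the ratio of each score to its minimum. As a sanity check, the sketch below recomputes them from the printed BKK03 values. The helper names are made up, and because the printed scores are already rounded, the recomputed margins can differ from the table by a few tenths of a percent.

```python
# Binary unit multipliers as printed by the benchmark ("GiBs" = GiB per second).
UNITS = {"KiBs": 1024, "MiBs": 1024 ** 2, "GiBs": 1024 ** 3}


def to_bytes_per_s(text: str) -> float:
    """Convert a value such as '1.56 GiBs' to bytes per second."""
    value, unit = text.split()
    return float(value) * UNITS[unit]


def margin_percent(score: str, minimum: str) -> float:
    """Score as a percentage of the required minimum."""
    return 100 * to_bytes_per_s(score) / to_bytes_per_s(minimum)


# (score, minimum) pairs copied from the BKK03 table above.
bkk03 = {
    "BLAKE2-256": ("1.56 GiBs", "783.27 MiBs"),
    "SR25519-Verify": ("788.10 KiBs", "560.67 KiBs"),
    "Memory copy": ("27.73 GiBs", "11.49 GiBs"),
    "Disk seq write": ("2.99 GiBs", "950.00 MiBs"),
    "Disk rnd write": ("1.29 GiBs", "420.00 MiBs"),
}

for name, (score, minimum) in bkk03.items():
    print(f"{name}: {margin_percent(score, minimum):.1f} %")
```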

Chassis: 1U Case, TGC H1-400

Power Supply Unit: 400W Compuware 80 PLUS Platinum PSU

KVM: AsRock Rack BMC/IPMI

The AsRock Rack motherboard includes a BMC (baseboard management controller) for remote control, offering robust management capabilities.

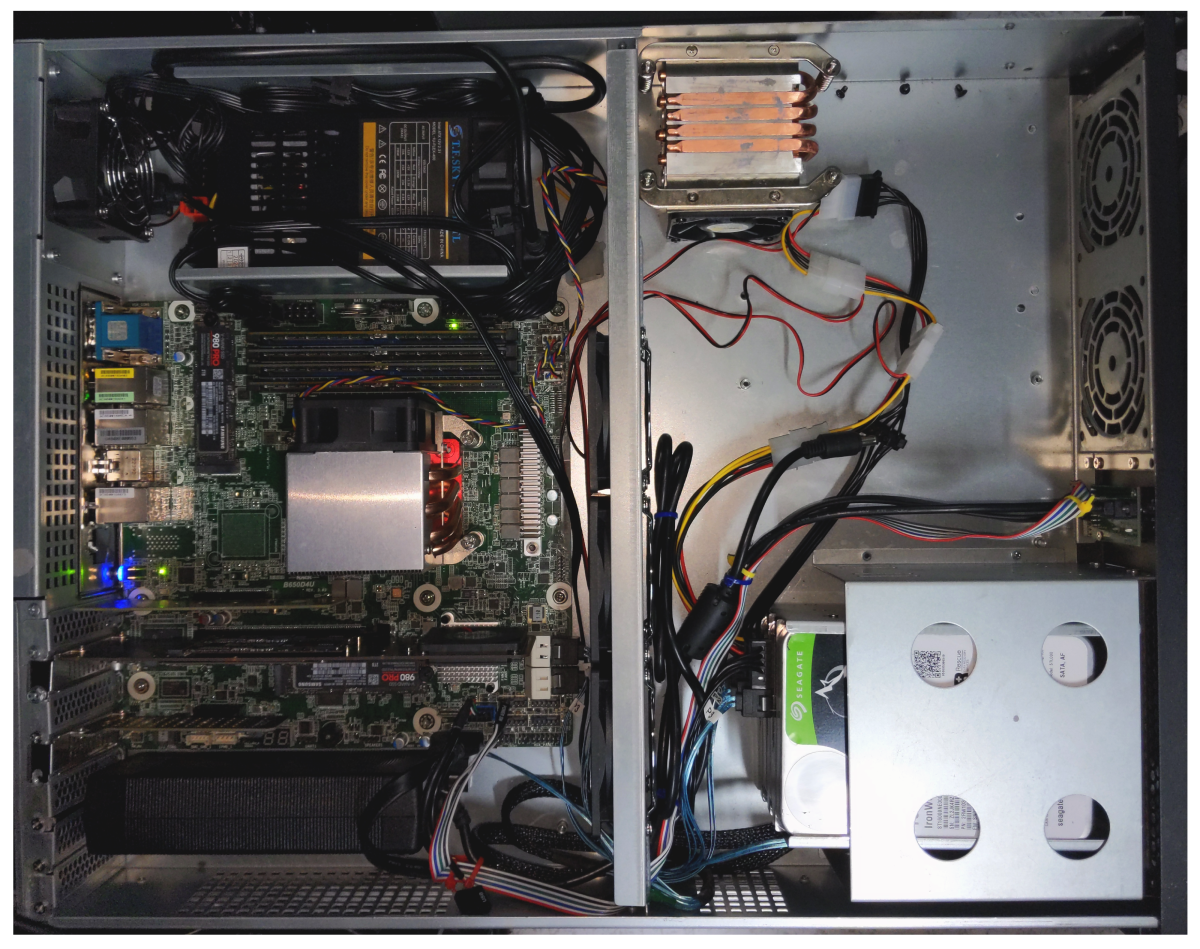

BKK04 - Bootnode/RPC

CPU

Model: AMD Ryzen 9 7950X, 16-Core 32-Thread, 5nm, 64MB L3, Socket AM5

- Core Count: 16 cores

- Technology: 5NM process

- L3 Cache: 64MB

- PCI Express: 5.0

- Memory Support: DDR5

Capabilities: Designed to manage multiple tasks with ease, such as running multiple networks simultaneously, efficient blockchain sync, and low latency transactions.

CPU Cooler

- Model: COOLSERVER R64 AM5 Server CPU Cooler

- Design: 4 high-quality heatpipes, 150W TDP, double ball bearing

- Enhancement: Thermalright aluminium alloy AM5 frame

Motherboard

Model: AsRock Rack B650D4U(LGA 1718)

- Form Factor: Micro-ATX

- Memory Support: DDR5 ECC UDIMM

- PCIe Slots: Full PCIe 5.0 support, M.2 slot, x16 slot, and x4 slot

- Storage Support: Up to 7 M.2 slots

Memory

Model: 4x Micron 32GB DDR5 ECC UDIMM server memory modules, 4800MHz, CL40, 1.1V (MTC20C2085S1EC48BA1R)

- Capacity: 4 modules of 32GB DDR5 each

- Technology: ECC for increased data integrity

- Performance: Low-latency

SSD Expansion Cards

- Model: 4-port NVMe SSD to PCIe 4.0 x16 RAID adapter expansion card

Storage

- Primary Storage: 6x 2TB Samsung 980 PRO SSD

- Backup Storage: 3x 16TB disks in ZFS RAIDZ (32TB total)

Benchmarks

+----------+----------------+-------------+-------------+-------------------+

| Category | Function | Score | Minimum | Result |

+===========================================================================+

| CPU | BLAKE2-256 | 1.65 GiBs | 783.27 MiBs | ✅ Pass (215.8 %) |

|----------+----------------+-------------+-------------+-------------------|

| CPU | SR25519-Verify | 832.82 KiBs | 560.67 KiBs | ✅ Pass (148.5 %) |

|----------+----------------+-------------+-------------+-------------------|

| Memory | Copy | 16.99 GiBs | 11.49 GiBs | ✅ Pass (147.9 %) |

|----------+----------------+-------------+-------------+-------------------|

| Disk | Seq Write | 2.09 GiBs | 950.00 MiBs | ✅ Pass (225.3 %) |

|----------+----------------+-------------+-------------+-------------------|

| Disk | Rnd Write | 885.35 MiBs | 420.00 MiBs | ✅ Pass (210.8 %) |

+----------+----------------+-------------+-------------+-------------------+

From 5 benchmarks in total, 5 passed and 0 failed (10% fault tolerance).

2023-08-03 00:49:00 The hardware meets the requirements

Read Latency Statistics in nanoseconds:

-------------------------

Minimum: 460 ns

Maximum: 535014 ns

Mean: 968.885148 ns

Standard Deviation: 280.737214 ns

99.99th Percentile Read Latency: 350 ns

-------------------------

Read IOPS: 906996.500117

The read latency meets the 2000 ns and lower QoS requirement

Chassis

- Model: TGC-24550 2U

- Design: 2U rackmount, efficient airflow design

Power Supply Unit

- Model: T.F.SKYWINDINTL 1U MINI Flex ATX Power Supply Unit 400W Modular PSU

- Capacity: 400W

- Features: Built-in cooling fan, overcurrent, overvoltage, and short-circuit protection

KVM

- Model: AsRock Rack BMC/IPMI for remote control

Summary

The BKK04 bootnode combines cutting-edge components to deliver high performance, reliability, and scalability. From the AMD Ryzen 9 7950X processor, with its 32 threads and high clock speeds, to the efficient memory and robust storage, every part of this server is designed to handle demanding server applications, particularly those related to blockchain processing. The cooling and power supply units were chosen for stable long-term operation. All components have also been chosen to be the most energy-efficient solutions the market can currently provide.

BKK05: Pioneering RISC-V Debian Webhosting

Introduction

Welcome to the frontier of web hosting with our BKK05 server, a trailblazing platform powered by the RISC-V Debian. This server represents not just a shift in technology but an experimental leap into the future of open-source computing.

Technical Specifications

| Component | Specifications |
|---|---|
| Processor | StarFive JH7110 64-bit SoC with RV64GC, up to 1.5GHz |
| Memory | LPDDR4, configurable up to 8GB |
| Storage | 2TB Samsung 980 NVMe |
| Networking | Dual RJ45 Gigabit Ethernet |
| Expansion | M.2 M-Key for NVMe SSDs |
| USB Ports | 2x USB 2.0 + 2x USB 3.0 |
| Video Out | HDMI 2.0, supporting 4K resolution |

Performance

The BKK05 server is equipped with a VisionFive 2 SBC at its core, featuring a StarFive JH7110 SoC. With 8GB of LPDDR4 RAM and a 2TB Samsung 980 NVMe drive, it's designed to handle web hosting and experimental server tasks with ease. Its RISC-V architecture ensures an open and versatile computing experience.

RISC-V Debian: The New Era

RISC-V brings a breath of fresh air to the server landscape, offering an open ISA (Instruction Set Architecture) that fosters innovation. Running Debian on RISC-V on our BKK05 server underpins our commitment to pioneering technology and community-driven development.

Web Hosting Capabilities

BKK05 runs Debian 12 (Bookworm), optimized for the RISC-V architecture. The server's configuration, which includes a robust 2TB NVMe drive, is particularly suited for web hosting, offering rapid data retrieval and ample storage for web applications.

Experimental Projects

The open nature of RISC-V and Debian makes BKK05 the perfect candidate for experimental projects. Its platform is ideal for developers looking to explore the capabilities of RISC-V architecture and contribute to the growth of the ecosystem.

Conclusion

The BKK05 server is a testament to our commitment to embracing open and innovative technologies. By leveraging the power of RISC-V Debian, we provide a stable and forward-thinking web hosting service while also contributing to an exciting new chapter in computing.

BKK06 - High-Performance Storage/Compute Node

CPU

Model: AMD EPYC™ 7713

- Core Count: 64 cores

- Threads: 128

- Max. Boost Clock: Up to 3.675GHz

- Base Clock: 2.0GHz

- L3 Cache: 256MB

- TDP: 225W

Capabilities: Optimized for high-throughput storage operations and parallel processing workloads. The EPYC 7713 provides exceptional multi-threaded performance for distributed storage and compute tasks.

Motherboard

Model: Supermicro H11SSL-i

- Chipset: System on Chip

- Form Factor: E-ATX

- Memory Slots: 8 x DIMM slots supporting DDR4

- PCIe Slots: Multiple PCIe 3.0 slots

Memory

Model: 8x Micron 32GB ECC Registered DDR4 3200

- Total Capacity: 256GB

- Technology: ECC Registered for data integrity

- Speed: 3200MHz

Storage Configuration

Pool: bkk06pool

State: ONLINE (with errors requiring attention)

Configuration: 6 mirror vdevs (12x Samsung 990 PRO 4TB NVMe)

Status: 16 data errors detected during scrub

Recent: Removal of vdev 5 completed, 93.6M memory used for mappings

Networking

Primary Interfaces:

- 2x Intel E810-C 25G QSFP ports (100G capable)

- 2x Broadcom BCM5720 1G management ports

Anycast Configuration:

- Participates in 100G anycast routing via BGP

- Route reflector client with dedicated ASN

- Q-in-Q VLAN tagging for service isolation

- Bond interfaces: bond-bkk00 and bond-bkk20 for redundancy

Active Services (28 containers)

Primary RPC nodes for:

- Polkadot relay chain and system parachains

- Kusama relay chain and system parachains

- Multiple Polkadot parachains (Moonbeam, Acala, Hydration, Bifrost, etc.)

- Paseo testnet infrastructure

- Penumbra node

- HAProxy load balancer

Chassis and Power

- Chassis: 2U Supermicro server chassis

- PSU: Greatwall Dual PSU 2U 1+1 CRPS redundant 800W

BKK07 - High-Performance Storage/Compute Node

CPU

Model: AMD EPYC™ 9654

- Core Count: 96 cores

- Threads: 192

- Max. Boost Clock: Up to 3.7GHz

- Base Clock: 2.4GHz

- L3 Cache: 384MB

- TDP: 360W (Configurable 320-400W)

Capabilities: Cutting-edge Genoa generation processor delivering exceptional performance for high-concurrency storage operations and compute-intensive workloads.

Motherboard

Model: Supermicro H13SSL-N

- Chipset: System on Chip

- Form Factor: ATX

- Memory Slots: 12 x DIMM slots supporting DDR5

Memory

Model: 6x SuperMicro 64GB ECC Registered DDR5 4800

- Current Capacity: 384GB (6 modules)

- Technology: DDR5 ECC Registered

- Speed: 4800MHz

- Upgrade Path: Expandable to 12 modules for full bandwidth

Storage Configuration

Pool: bkk07pool

State: ONLINE

Configuration: 1x 12-disk RAIDZ2

- 12x Samsung 990 PRO 4TB NVMe

Redundancy: Can tolerate 2 simultaneous disk failures

Capacity: ~32TB usable (10 data + 2 parity)

Last scrub: 0 errors (July 13, 2025)

Networking

Primary Interfaces:

- 2x Intel E810-C 25G QSFP ports (100G capable)

- 2x Broadcom BCM5720 1G management ports

Anycast Configuration:

- Participates in 100G anycast routing via BGP

- Route reflector client with dedicated ASN

- VLAN configuration: 100p1.400 for backbone connectivity

- Dual bond interfaces for active-backup failover

Active Services (24 containers)

Secondary RPC nodes for:

- Polkadot relay chain and all system parachains

- Kusama relay chain and all system parachains

- Paseo testnet (relay and system chains)

- Penumbra node

- IBP infrastructure

- HAProxy load balancer

- Build and Docker services

Board Management Controller (BMC)

- BMC Model: Aspeed AST2600

- Capabilities: Full remote management with KVM over IP

Chassis and Power

- Chassis: Ultra Short 2U rackmount chassis

- PSU: Greatwall Dual PSU 2U 1+1 CRPS redundant 800W

BKK08 - High-Performance Storage/Compute Node

CPU

Model: AMD EPYC™ 7742

- Core Count: 64 cores

- Threads: 128

- Max. Boost Clock: Up to 3.4GHz

- Base Clock: 2.25GHz

- L3 Cache: 256MB

- TDP: 225W

Capabilities: Optimized for parallel processing and storage workloads, delivering excellent multi-threaded performance for distributed systems.

Motherboard

Model: Supermicro H11SSL-series

- Chipset: System on Chip

- Form Factor: E-ATX

- Memory Slots: 8 x DIMM slots supporting DDR4

Memory

Configuration: 8x 32GB DDR4 ECC Registered

- Total Capacity: 256GB

- Speed: 3200MHz

- Technology: ECC for data integrity

Storage Configuration

Pool: bkk08pool

State: ONLINE

Configuration: 2x 4-disk RAIDZ1 vdevs

- 8x Samsung 990 PRO 4TB NVMe total

Redundancy: Can tolerate 1 disk failure per vdev

Capacity: ~24TB usable (6 data + 2 parity)

Last scrub: 0 errors (July 13, 2025)
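The "6 data + 2 parity" figure follows from simple RAIDZ arithmetic: each vdev gives up its parity disks, and the pool capacity is the sum over vdevs. The sketch below shows that nominal calculation. The function name is made up, and the result ignores ZFS metadata, padding, and reserved space, so the capacity reported by the pool itself will be lower.

```python
def raidz_usable_tb(vdevs: int, disks_per_vdev: int, disk_tb: float, parity: int) -> float:
    """Nominal usable capacity of a pool made of identical RAIDZ vdevs."""
    if not 0 < parity < disks_per_vdev:
        raise ValueError("parity must be at least 1 and smaller than the vdev width")
    data_disks = disks_per_vdev - parity
    return vdevs * data_disks * disk_tb


# bkk08pool: 2 RAIDZ1 vdevs of 4 x 4TB each -> 6 data disks in total.
print(raidz_usable_tb(vdevs=2, disks_per_vdev=4, disk_tb=4, parity=1))

# BKK04 backup storage: a single 3 x 16TB RAIDZ vdev, assuming single parity.
print(raidz_usable_tb(vdevs=1, disks_per_vdev=3, disk_tb=16, parity=1))
```

The trade-off is visible in the same arithmetic: two narrow RAIDZ1 vdevs spend two disks on parity but can only survive one failure per vdev.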

Networking

Primary Interfaces:

- 2x Intel E810-C 25G QSFP ports (100G capable)

- 2x Broadcom BCM5720 1G management ports

Anycast Configuration:

- Participates in 100G anycast routing via BGP

- Route reflector client with dedicated ASN

- Redundant path configuration for high availability

- Q-in-Q VLAN tagging for traffic isolation

Active Services (24 containers)

Tertiary/specialized nodes for:

- Polkadot RPC and parachains (Moonbeam, Nexus, Acala, Kilt, Hydration, Bifrost, Ajuna, Polimec, Unique, XCavate, Mythos)

- Paseo validator and RPC nodes

- Penumbra node

- IBP infrastructure

- IX peering management

- HAProxy load balancer

- Build services and logging infrastructure

Board Management Controller (BMC)

- BMC Model: Aspeed AST2500

- Capabilities: Remote management with IPMI/KVM over IP

Chassis and Power

- Chassis: Ultra Short 2U rackmount chassis

- PSU: Greatwall Dual PSU 2U 1+1 CRPS redundant 800W

Network Architecture Overview

All three nodes (BKK06, BKK07, BKK08) participate in our advanced networking infrastructure:

Anycast Implementation

- 100G Backbone: High-speed interconnects between storage nodes

- BGP Routing: Each node operates as a route reflector client

- Dedicated ASN: Independent autonomous system number per edge node

- Service Isolation: Q-in-Q VLAN tagging for traffic segregation

Redundancy Features

- Dual Uplinks: Each node has redundant connections to core routers

- Active-Backup Bonds: Sub-second failover between interfaces

- Path Diversity: Multiple routing paths for fault tolerance

- VLAN Segmentation: Separate VLANs for management, storage, and service traffic

Performance Optimization

- Hardware Offload: Intel E810-C NICs provide advanced packet processing

- NUMA Awareness: Memory and network affinity for optimal performance

- Jumbo Frames: MTU 9000 enabled on storage network

- Flow Control: Configured for lossless Ethernet on storage paths
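To see why jumbo frames matter on the storage network, the following sketch compares TCP payload efficiency at MTU 1500 and MTU 9000. It assumes IPv4 and TCP headers without options (20 bytes each) and untagged Ethernet framing (38 bytes of preamble, header, FCS, and inter-frame gap). Q-in-Q tagging would add 8 bytes per frame, so treat the numbers as approximate.

```python
# Per-frame overhead on the wire, assuming untagged Ethernet:
# 8 (preamble + SFD) + 14 (header) + 4 (FCS) + 12 (inter-frame gap)
ETHERNET_OVERHEAD = 38
# IPv4 + TCP headers without options (assumption)
IP_TCP_HEADERS = 40


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of wire bytes that carry TCP payload for a full-size frame."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETHERNET_OVERHEAD
    return payload / on_wire


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {tcp_payload_efficiency(mtu):.2%} payload efficiency")
```

The gain in raw efficiency is a few percent; the larger practical benefit is usually the lower per-packet processing load at high throughput.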

BKK09

BKK11

BKK12

BKK13

Software Infrastructure

Our infrastructure leverages several powerful technologies and platforms to provide a robust and efficient environment for our operations.

Debian

Our servers run on Debian, a highly stable and reliable Linux-based operating system. Debian provides a strong foundation for our operations, with its wide array of packages, excellent package management system, and strong community support. Its stability and robustness make it an excellent choice for our server environments.

Proxmox Virtual Environment

We utilize Proxmox, an open-source server virtualization management solution. Proxmox allows us to manage virtual machines, containers, storage, virtualized networks, and HA clustering from a single, integrated platform. This is crucial in ensuring we have maximum control and efficiency in managing our various server processes. We run the Linux 6.1 LTS PVE kernel.

LXC (Linux Containers)

We leverage LXC (Linux Containers) to run multiple isolated Linux systems (containers) on a single host. This containerization technology provides us with lightweight, secure, and performant alternatives to full machine virtualization.

ZFS

ZFS, the Zettabyte File System, is an advanced filesystem and logical volume manager. It was designed to overcome many of the major issues found in previous designs and is used for storing data in our Proxmox environment. It provides robust data protection, supporting high storage capacities and efficient data compression, and allows us to create snapshots and clones of our filesystem.

Ansible

We use Ansible for automation of our system configuration and management tasks. Ansible enables us to define and deploy consistent configurations across multiple servers, and automate routine maintenance tasks, thus increasing efficiency and reducing the risk of errors.

MikroTik RouterOS

Our network infrastructure relies on MikroTik RouterOS, a robust network operating system. This system offers a variety of features such as routing, firewall, bandwidth management, wireless access point, backhaul link, hotspot gateway, VPN server, and more. This helps us ensure secure, efficient, and reliable network operations.

All these technologies are intertwined, working together to support our operations. They are chosen not just for their individual capabilities, but also for their compatibility and interoperability, creating an integrated, efficient, and reliable software infrastructure.

Network Configuration Documentation

Overview

This document outlines the BGP routing configuration for our multi-homed network with connections to various internet exchanges and transit providers in Bangkok, Hong Kong, Singapore, and Europe. Currently 3x 10G fibers are used for physical uplinks.

Bandwidth

Network Topology Diagram

graph TD

BKK50((BKK50 Gateway Router<br>CCR2004-16G-2S+<br>ECMP with 10G connections))

BKK50 --> |10G| BKK20

BKK50 --> |10G| BKK10

subgraph BKK20[BKK20 Edge Router<br>CCR2216-1G-12XS-2XQ]

B20_AMSIX[AMSIX-LAG<br>10G Physical Port]

end

subgraph BKK10[BKK10 Edge Router<br>CCR2116-12G-4S+]

B10_AMSIX[AMSIX-LAG<br>10G Physical Port]

B10_BKNIX[BKNIX-LAG<br>10G Physical Port]

end

B20_AMSIX --> |VLAN 911<br>1G| AMS_IX_BKK[AMS-IX Bangkok]

B20_AMSIX --> |VLAN 3994<br>200M| AMS_IX_HK[AMS-IX Hong Kong]

B20_AMSIX ==> |VLAN 2520<br>500M<br>Active| IPTX_SG[IPTX Singapore]

B20_AMSIX -.-> |VLAN 2517<br>500M<br>Passive| IPTX_HK[IPTX Hong Kong]

B10_AMSIX ==> |VLAN 2519<br>500M<br>Active| IPTX_HK

B10_AMSIX -.-> |VLAN 2518<br>500M<br>Passive| IPTX_SG

B10_AMSIX --> |VLAN 3995<br>100M| AMS_IX_EU[AMS-IX Europe]

B10_BKNIX --> |10G| BKNIX[BKNIX]

AMS_IX_BKK --> INTERNET((Internet))

AMS_IX_HK --> INTERNET

AMS_IX_EU --> INTERNET

IPTX_SG --> INTERNET

IPTX_HK --> INTERNET

BKNIX --> INTERNET

classDef router fill:#1a5f7a,color:#ffffff,stroke:#333,stroke-width:2px;

classDef ix fill:#4d3e3e,color:#ffffff,stroke:#333,stroke-width:2px;

classDef internet fill:#0077be,color:#ffffff,stroke:#333,stroke-width:2px;

classDef active stroke:#00ff00,stroke-width:4px;

classDef passive stroke:#ff0000,stroke-dasharray: 5 5;

class BKK50,INTERNET internet;

class BKK20,BKK10 router;

class AMS_IX_BKK,AMS_IX_HK,AMS_IX_EU,IPTX_SG,IPTX_HK,BKNIX ix;

linkStyle default stroke:#ffffff,stroke-width:2px;

Routing Configuration

| Name | Speed | Path Prepend | MED | Local Pref | External Communities | Description | Router |
|---|---|---|---|---|---|---|---|
| BKNIX | 10G | 0 | 10 | 200 | 142108:1:764 | Local IX | BKK10 |
| AMS-IX Bangkok | 1G | 0 | 20 | 190 | 142108:1:764 | Local IX | BKK20 |
| IPTX Singapore-BKK10 | 500M | 1 | 50 | 170 | 142108:2:35 | Regional Transit | BKK10 |
| IPTX Singapore-BKK20 | 500M | 1 | 50 | 160 | 142108:2:35 | Regional Transit | BKK20 |
| AMS-IX Hong Kong | 200M | 2 | 100 | 150 | 142108:2:142 | Regional IX | BKK20 |
| IPTX HK-BKK20 | 500M | 2 | 100 | 130 | 142108:2:142 | Regional Transit | BKK20 |
| IPTX HK-BKK10 | 500M | 2 | 100 | 120 | 142108:2:142 | Regional Transit | BKK10 |
| AMS-IX EU | 100M | 3 | 200 | 100 | 142108:2:142 | Remote IX | BKK10 |

This way:

- We keep our internal communities for our own routing decisions

- We share geographic information allowing peers to optimize their routing

- Peers can make informed decisions about traffic paths to our network
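The external communities in the routing table follow a three-part colon-separated layout, as BGP large communities do. A minimal sketch of how a peer might decode them is shown here. The meaning assigned to the second and third fields (a scope, then a numeric region code such as 764 for Thailand) is inferred from the table rather than taken from a published specification, and the SCOPES labels are hypothetical.

```python
from typing import NamedTuple

# Hypothetical labels for the second field, inferred from the table:
# 1 appears on local IX rows, 2 on regional and remote rows.
SCOPES = {1: "local", 2: "regional/remote"}


class Community(NamedTuple):
    asn: int
    scope: int
    region_code: int


def parse_community(text: str) -> Community:
    """Split an 'ASN:scope:region' string into its three integer fields."""
    parts = text.split(":")
    if len(parts) != 3:
        raise ValueError(f"expected three colon-separated fields: {text!r}")
    asn, scope, region = (int(p) for p in parts)
    return Community(asn, scope, region)


c = parse_community("142108:1:764")
print(c.asn, SCOPES.get(c.scope, "unknown"), c.region_code)
```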

Traffic Engineering Principles

- Local Preference: Higher values indicate more preferred routes. Local routes are preferred over regional, which are preferred over remote routes.

- MED (Multi-Exit Discriminator): Lower values are preferred. Used to influence inbound traffic when other attributes are equal.

- AS Path Prepending: Increases AS path length to make a route less preferred. Used for coarse control of inbound traffic.
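These attributes are evaluated in a fixed order of precedence. The sketch below ranks candidate routes by the first criteria of the standard BGP decision process that the principles above mention: highest local preference, then shortest AS path, then lowest MED. It is a simplification: real implementations apply further tie-breakers, and MED is normally compared only between routes learned from the same neighboring AS. The Route type and the sample AS path lengths are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    local_pref: int
    as_path_len: int
    med: int


def preference_key(route: Route) -> tuple:
    """Sort key: higher local-pref first, then shorter AS path, then lower MED."""
    return (-route.local_pref, route.as_path_len, route.med)


def best_route(routes: list[Route]) -> Route:
    """Pick the most preferred route under the simplified ordering."""
    return min(routes, key=preference_key)


candidates = [
    Route("AMS-IX EU", local_pref=100, as_path_len=3, med=200),
    Route("BKNIX", local_pref=200, as_path_len=2, med=10),
    Route("AMS-IX Bangkok", local_pref=190, as_path_len=2, med=20),
]
print(best_route(candidates).name)  # BKNIX
```

Because local preference is compared first, a longer AS path or higher MED never overrides it; those attributes only break ties among routes with equal local preference.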

HGC Circuit Configuration for ROTKO NETWORKS OÜ

The following circuits terminate at STT Bangkok 1 Data Center, MMR 3A facility in Bangkok, Thailand. All circuits are delivered to ROTKO NETWORKS OÜ infrastructure.

Primary Transit Circuits

Circuit PP9094729 (OTT00003347)

- Service: 400M (Burst to 800M) Thailand IPTx FOB Hong Kong

- Delivery: Customer Headend #2 (PEH8001159)

- VLAN: 2519

- Status: Hot upgrade + renewal

Circuit PP9094730 (OTT00003348)

- Service: 400M (Burst to 800M) Thailand IPTx FOB Singapore

- Delivery: Customer Headend #1 (PEH8001158)

- VLAN: 2520

- Status: Hot upgrade + renewal

Backup Circuits

Circuit PP9094735 (OTT00003349)

- Service: 800M Leased Line Internet Service FOB Thailand (Backup)

- Delivery: Customer Headend #1 (PEH8001158)

- VLAN: 2517

- Status: Hot upgrade + renewal

Circuit PP9094736 (OTT00003350)

- Service: 800M Leased Line Internet Service FOB Thailand (Backup)

- Delivery: Customer Headend #2 (PEH8001159)

- VLAN: 2518

- Status: Hot upgrade + renewal

Configuration Validation

BKK00: HK-HGC-IPTx-vlan2519 (Primary HK - PEH8001159)

BKK00: SG-HGC-IPTx-backup-vlan2518 (Backup SG - PEH8001159)

BKK20: SG-HGC-IPTx-vlan2520 (Primary SG - PEH8001158)

BKK20: HK-HGC-IPTx-backup-vlan2517 (Backup HK - PEH8001158)
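The mapping above can be cross-checked mechanically against the circuit list. The sketch below, with VLAN and headend values copied from this section, extracts the VLAN ID from each interface name and confirms it lands on the headend the circuit was delivered to. The data layout and function name are illustrative.

```python
import re

# VLAN -> delivery headend, copied from the circuit list above
CIRCUIT_HEADEND = {
    2519: "PEH8001159",
    2520: "PEH8001158",
    2517: "PEH8001158",
    2518: "PEH8001159",
}

# (router, interface name, headend) from the validation list above
INTERFACES = [
    ("BKK00", "HK-HGC-IPTx-vlan2519", "PEH8001159"),
    ("BKK00", "SG-HGC-IPTx-backup-vlan2518", "PEH8001159"),
    ("BKK20", "SG-HGC-IPTx-vlan2520", "PEH8001158"),
    ("BKK20", "HK-HGC-IPTx-backup-vlan2517", "PEH8001158"),
]


def mismatches(interfaces, circuits):
    """Return interfaces whose VLAN is unknown or sits on the wrong headend."""
    bad = []
    for router, name, headend in interfaces:
        match = re.search(r"vlan(\d+)$", name)
        vlan = int(match.group(1)) if match else None
        if circuits.get(vlan) != headend:
            bad.append((router, name))
    return bad


print(mismatches(INTERFACES, CIRCUIT_HEADEND))  # [] when everything lines up
```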

IBP Rank 6 Network Infrastructure Documentation

ROTKO NETWORKS OÜ (AS142108)

Executive Summary

ROTKO NETWORKS operates a highly redundant, carrier-grade network infrastructure in Bangkok, Thailand, designed to meet and exceed IBP Rank 6 requirements. Our infrastructure features full redundancy at every layer - from multiple 10G/100G transit connections to redundant route reflectors, hypervisors, and storage systems.

Network Architecture Overview

Core Routing Infrastructure

Our network utilizes a hierarchical BGP architecture with dedicated route reflectors providing redundancy and scalability:

Route Reflectors (Core Routers)

- BKK00 (CCR2216-1G-12XS-2XQ) - Primary Route Reflector

  - Router ID: 10.155.255.4

  - 100G connections to edge routers

  - Multiple 10G transit/IX connections

- BKK20 (CCR2216-1G-12XS-2XQ) - Secondary Route Reflector

  - Router ID: 10.155.255.2

  - 100G connections to edge routers

  - Diverse 10G transit/IX connections

Both route reflectors run iBGP with full mesh connectivity and serve as aggregation points for external BGP sessions.

Layer 2 Switching Infrastructure

Core Switches

- BKK30 (CRS504-4XQ) - 4x100G QSFP28 switch

- BKK40 (CRS504-4XQ) - 4x100G QSFP28 switch

- BKK10 (CCR2216-1G-12XS-4XQ) - 4xSFP+ 25G 12x 1G (fallback for 100G)

- BKK60 (CRS354-48G-4S+2Q+) - 48x1G + 4xSFP+ + 2xQSFP+ switch (reserved)

- BKK50 (CCR2004-16G-2S+) - Management network

These switches provide Q-in-Q VLAN tagging for service isolation and traffic segregation.

Multi-Layer Redundancy Architecture

1. Physical Layer Redundancy

Diverse Fiber Paths

- 3x Independent 10G fiber uplinks from different carriers

- Physically diverse cable routes to prevent simultaneous cuts

- Multiple cross-connects within the data center

- Dual power feeds to all critical equipment

Hardware Redundancy

- Dual PSU on all servers and network equipment

- Hot-swappable components (fans, PSUs, drives)

- N+1 cooling in server chassis

- Spare equipment pre-racked for rapid replacement

2. Network Layer Redundancy

Transit Diversity

Our multi-homed architecture eliminates single points of failure:

Primary Transit - HGC (AS9304)

Hong Kong Path:

├── Active: BKK00 → VLAN 2519 → 400M (burst 800M)

└── Backup: BKK20 → VLAN 2517 → 800M standby

Singapore Path:

├── Active: BKK20 → VLAN 2520 → 400M (burst 800M)

└── Backup: BKK00 → VLAN 2518 → 800M standby

Internet Exchange Diversity

Local (Thailand):

├── BKNIX: 10G direct peering (200+ networks)

└── AMS-IX Bangkok: 1G peering (100+ networks)

Regional:

├── AMS-IX Hong Kong: 200M connection

└── HGC IPTx: Dual paths to HK/SG

Global:

└── AMS-IX Europe: 100M connection

BGP Redundancy Features

- Dual Route Reflectors: Automatic failover with iBGP

- BFD Detection: 100ms failure detection

- Graceful Restart: Maintains forwarding during control plane restart

- Path Diversity: Multiple valid routes to every destination

- ECMP Load Balancing: Traffic distributed across equal-cost paths

3. Hypervisor Layer Redundancy

Proxmox Cluster Features

High Availability Configuration:

├── Live Migration: Zero-downtime VM movement

├── HA Manager: Automatic VM restart on node failure

├── Shared Storage: Distributed access to VM data

└── Fencing: Ensures failed nodes are isolated

Per-Hypervisor Network Redundancy

Each hypervisor (BKK06, BKK07, BKK08) implements:

Dual Uplink Configuration:

├── bond-bkk00 (Active Path)

│ ├── Primary: vlan1X7 @ 100G

│ └── Backup: vlan1X7 @ 100G (different switch)

└── bond-bkk20 (Standby Path)

├── Primary: vlan2X7 @ 100G

└── Backup: vlan2X7 @ 100G (different switch)

Failover Time: <100ms using active-backup bonding

4. Storage Layer Redundancy

ZFS Resilience by Node

BKK06 - Maximum Redundancy (Mirror)

Configuration: 6x mirror vdevs (2-way mirrors)

Fault Tolerance:

- Can lose 1 disk per mirror (up to 6 disks total)

- Instant read performance during rebuild

- 50% storage efficiency for maximum protection

Recovery: Hot spare activation < 1 minute

BKK07 - Balanced Redundancy (RAIDZ2)

Configuration: 12-disk RAIDZ2

Fault Tolerance:

- Can lose any 2 disks simultaneously

- Maintains full operation during rebuild

- 83% storage efficiency

Recovery: Distributed parity reconstruction

BKK08 - Performance Redundancy (RAIDZ1)

Configuration: 2x 4-disk RAIDZ1 vdevs

Fault Tolerance:

- Can lose 1 disk per vdev (2 disks total)

- Parallel vdev operation for performance

- 75% storage efficiency

Recovery: Per-vdev independent rebuild
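The efficiency figures above follow directly from the ratio of data disks to total disks. A small sketch of the arithmetic, ignoring ZFS metadata, padding, and reserved space (so real usable capacity will be somewhat lower):

```python
def storage_efficiency(vdevs: int, disks_per_vdev: int, redundancy: int) -> float:
    """Fraction of raw capacity left for data.

    redundancy is the number of disks per vdev that hold parity or
    mirror copies: 1 for RAIDZ1 and 2-way mirrors, 2 for RAIDZ2.
    """
    total = vdevs * disks_per_vdev
    data = vdevs * (disks_per_vdev - redundancy)
    return data / total


layouts = {
    "BKK06 (6x 2-way mirror)": (6, 2, 1),
    "BKK07 (12-disk RAIDZ2)": (1, 12, 2),
    "BKK08 (2x 4-disk RAIDZ1)": (2, 4, 1),
}
for name, layout in layouts.items():
    print(f"{name}: {storage_efficiency(*layout):.0%}")

# BKK08 uses 4 TB disks: 6 data disks x 4 TB = 24 TB before overhead
print(2 * (4 - 1) * 4, "TB")
```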

5. Service Layer Redundancy

Container Distribution Strategy

Service Deployment:

├── Primary Instance: BKK06

├── Secondary Instance: BKK07

├── Tertiary/Specialized: BKK08

└── Load Balancing: HAProxy on each node

Failover Mechanism:

- Health checks every 2 seconds

- Automatic traffic rerouting

- Session persistence via cookies

- <5 second service failover
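Health-check interval and failover time are linked: a backend is only marked down after a number of consecutive failed checks. The sketch below estimates the worst-case detection time. The fall count of 2 is an assumption for illustration (the text above only states the 2-second interval and the 5-second target), and the per-check timeout is ignored.

```python
def worst_case_detection(interval_s: float, fall: int) -> float:
    """Upper bound on the time needed to mark a backend down.

    A failure just after a successful check is first seen one interval
    later, and `fall` consecutive failures are needed, so the bound is
    interval * fall.
    """
    return interval_s * fall


INTERVAL_S = 2.0   # from the text: health checks every 2 seconds
FALL = 2           # assumed number of consecutive failures
TARGET_S = 5.0     # from the text: <5 second service failover

detection = worst_case_detection(INTERVAL_S, FALL)
print(f"detected within {detection:.0f}s, target met: {detection < TARGET_S}")
```

With a fall count of 3 the same interval would give 6 seconds, which would miss the stated target.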

Anycast Services

Global Anycast: 160.22.180.180/32

├── Announced from all locations

├── BGP-based geographic routing

└── Automatic closest-node selection

Local Anycast: 160.22.181.81/32

├── Thailand-specific services

├── Lower latency for regional users

└── Fallback to global on failure

6. Failure Scenarios & Recovery

Single Component Failures (No Impact)

- 1 Transit Link Down: Traffic reroutes via alternate transit

- 1 Route Reflector Down: Secondary handles all traffic

- 1 Hypervisor Down: VMs migrate or run from replicas

- 1 Disk Failure: ZFS continues with degraded redundancy

- 1 PSU Failure: Secondary PSU maintains operation

Multiple Component Failures (Degraded but Operational)

- Both HK Links Down: Singapore paths maintain connectivity

- Entire Hypervisor Failure: Services run from remaining 2 nodes

- Multiple Disk Failures: ZFS tolerates per design limits

- Primary + Backup Network Path: Tertiary paths available

Disaster Recovery

- Complete DC Failure:

  - Off-site backups available

  - DNS failover to alternate regions

  - Recovery Time Objective (RTO): 4 hours

  - Recovery Point Objective (RPO): 1 hour

Redundancy Validation & Testing

Automated Testing

- BGP Session Monitoring: Real-time alerting on session drops

- Path Validation: Continuous reachability tests

- Storage Health: ZFS scrubs weekly, SMART monitoring

- Service Health: Prometheus + Grafana dashboards

Manual Testing Schedule

- Monthly: Controlled failover testing

- Quarterly: Full redundancy validation

- Annually: Disaster recovery drill

Compliance with IBP Rank 6 Requirements

✓ No Single Point of Failure

Every critical component has at least one backup:

- Dual route reflectors with automatic failover

- Multiple transit providers and exchange points

- Redundant power, cooling, and network paths

- Distributed storage with multi-disk fault tolerance

✓ Sub-Second Network Convergence

- BFD: 100ms detection + 200ms convergence = 300ms total

- Bond failover: <100ms for layer 2 switchover

- BGP: Graceful restart maintains forwarding plane

✓ Geographic & Provider Diversity

- Transit via Hong Kong and Singapore

- Peering in Bangkok, Hong Kong, and Amsterdam

- Multiple submarine cable systems

- Carrier-neutral facility

✓ Automated Recovery

- HA cluster manages VM availability

- BGP automatically selects best paths

- ZFS self-healing with checksum validation

- Container orchestration via systemd

This comprehensive redundancy architecture ensures 99.95% uptime SLA compliance and exceeds all IBP Rank 6 requirements for infrastructure resilience.

Firmware Updates

Samsung 980Pro NVMe

wget https://semiconductor.samsung.com/resources/software-resources/Samsung_SSD_980_PRO_5B2QGXA7.iso

apt-get -y install gzip unzip wget cpio

mkdir /mnt/iso

sudo mount -o loop ./Samsung_SSD_980_PRO_5B2QGXA7.iso /mnt/iso/

mkdir /tmp/fwupdate

cd /tmp/fwupdate

gzip -dc /mnt/iso/initrd | cpio -idv --no-absolute-filenames

cd root/fumagician/

sudo ./fumagician

This ISO is for the 980 Pro. If you have a different model, replace the ISO with the matching link from https://semiconductor.samsung.com/consumer-storage/support/tools/
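Before and after flashing, it helps to confirm which firmware revision each drive reports. The sketch below reads the model and firmware_rev attributes that the Linux NVMe driver exposes under /sys/class/nvme. The function name and the optional base-path argument are illustrative, added so the helper can be pointed at a test directory.

```python
from pathlib import Path


def nvme_firmware(base: str = "/sys/class/nvme") -> dict[str, tuple[str, str]]:
    """Map controller name (e.g. 'nvme0') to (model, firmware revision)."""
    result = {}
    root = Path(base)
    if not root.is_dir():
        return result
    for ctrl in sorted(root.iterdir()):
        model = ctrl / "model"
        firmware = ctrl / "firmware_rev"
        if model.is_file() and firmware.is_file():
            result[ctrl.name] = (
                model.read_text().strip(),
                firmware.read_text().strip(),
            )
    return result


if __name__ == "__main__":
    for name, (model, rev) in nvme_firmware().items():
        print(f"{name}: {model} firmware {rev}")
```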

ASRock Rack Motherboard

This guide outlines the steps to update the firmware on your ASRock Rack motherboard. The update includes the BIOS, BMC (Baseboard Management Controller), and the networking firmware.

Before starting, download the following files:

BIOS Update

ASRock Rack provides a BIOS flash utility called ASRock Rack Instant Flash, embedded in the Flash ROM, to make the BIOS update process simple and straightforward.

1. Preparation: Format a USB flash drive with a FAT32/16/12 file system and save the new BIOS file to your USB flash drive.

2. Access ASRock Rack Instant Flash: Restart the server and press the <F6> key during the POST or the <F2> key to enter the BIOS setup menu. From there, access the ASRock Rack Instant Flash utility.

3. Update BIOS: Follow the instructions provided by the utility to update the BIOS.

BMC Firmware Update

In order to keep your BMC firmware up-to-date and have the latest features and improvements, regular updates are recommended. This guide provides step-by-step instructions on how to update your BMC firmware.

1. Preparation: Download the correct BMC firmware update file from the ASRock Rack website. Ensure the firmware version is later than the one currently installed on your device. Save the firmware file on your local system.

2. Access BMC Maintenance Portal: Open your web browser and navigate to the BMC maintenance portal using the IP address of the BMC. Typically, the URL is https://[BMC IP Address]/#maintenance/firmware_update_wizard, for instance, https://192.168.33.114/#maintenance/firmware_update_wizard.

3. Login: Use your BMC username and password to log into the portal.

4. Firmware Update Section: Navigate to the firmware update section.

5. Upload Firmware Image: Click on "Select Firmware Image" and upload the firmware file you downloaded earlier. The firmware files typically end with .ima, for instance, B650D4U_2L2T_4.01.00.ima.

6. Preserve Configuration: If you want to preserve all the current configurations during the update, check the box "Preserve all Configuration". This will maintain all the settings irrespective of the individual items marked as preserve/overwrite in the table below.

7. Start Update: Click "Firmware Update". The system will validate the image and if successful, the update process will start. The progress will be shown on the screen.

8. Reboot: Once the update is completed, the system will reboot automatically.

WARNING: Please note that after entering the update mode, other web pages, widgets, and services will not work. All the open widgets will be automatically closed. If the update is cancelled in the middle of the process, the device will be reset only for BMC BOOT, and APP components of Firmware.

NOTE: The IP address used in this guide is an example. Replace it with the actual IP address of your BMC. Also, remember to use a reliable network connection during the update process to prevent any interruption.

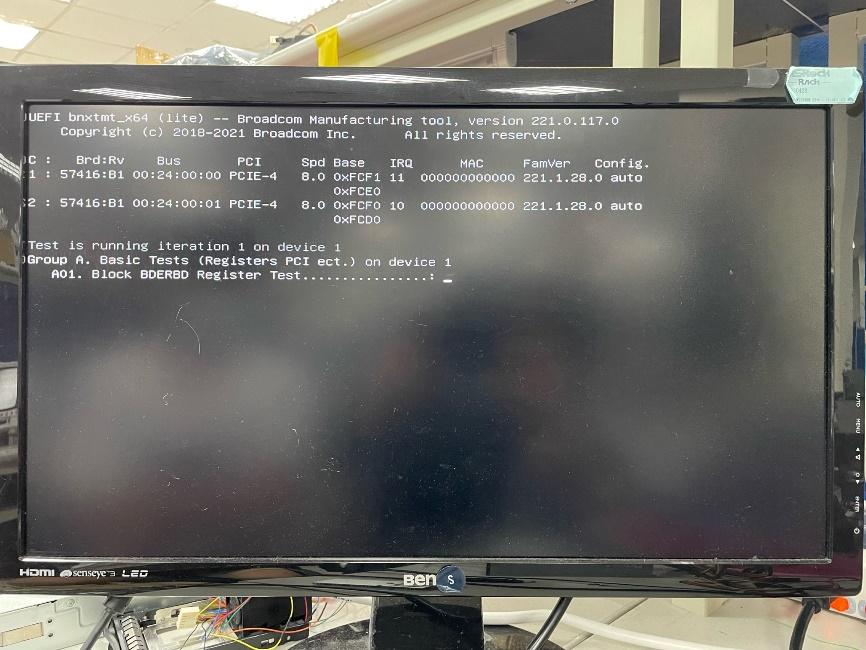

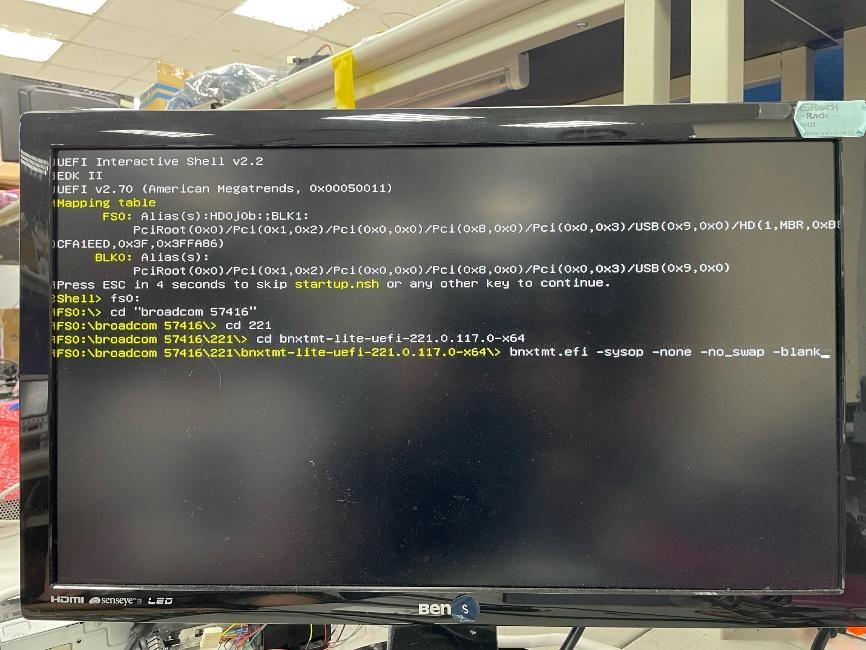

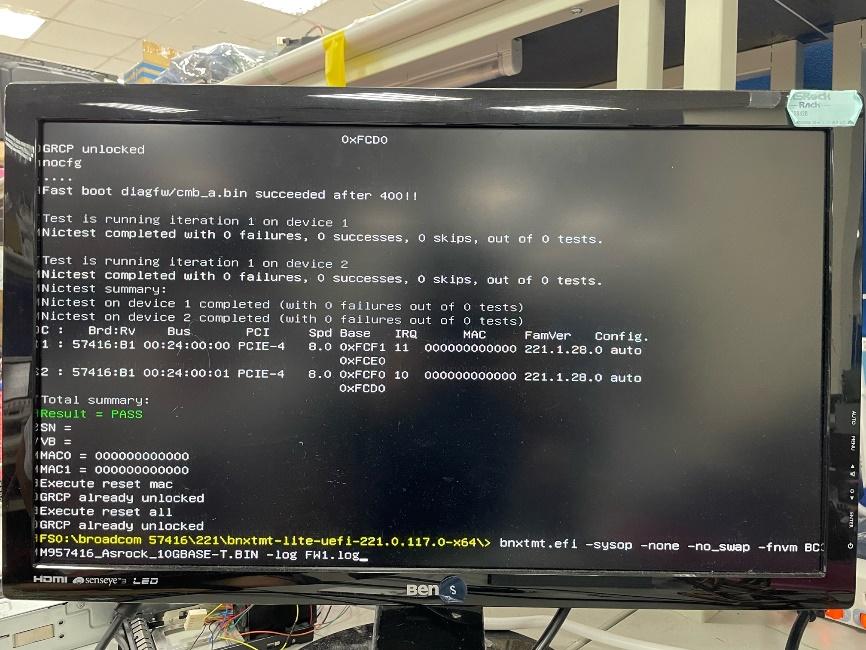

Networking Firmware Update

-

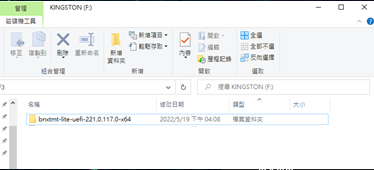

Preparation: Format a USB flash drive and copy the "bnxtmt-lite-uefi-221.0.117.0-x64" folder from the downloaded Broadcom 57416 LAN Flashing Firmware onto it.

-

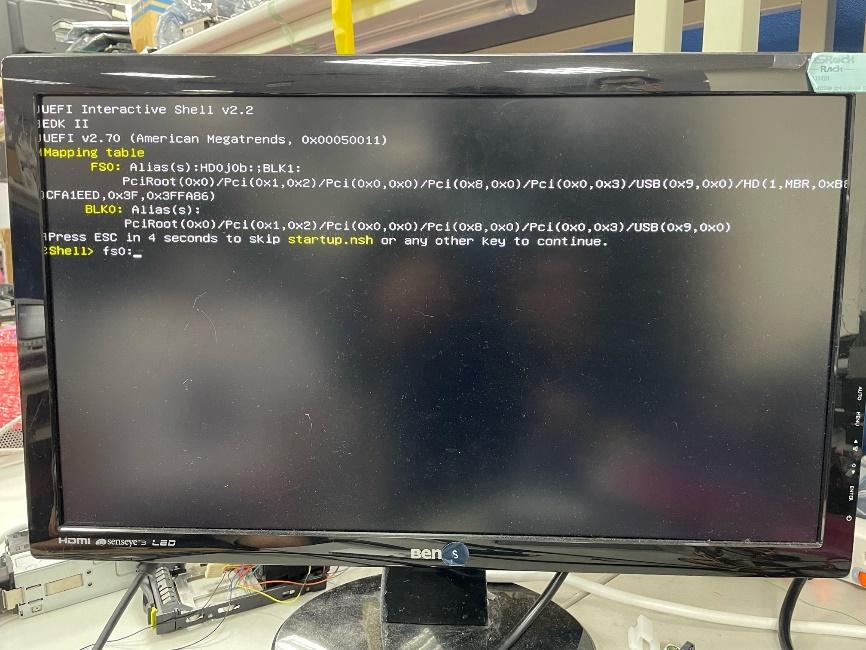

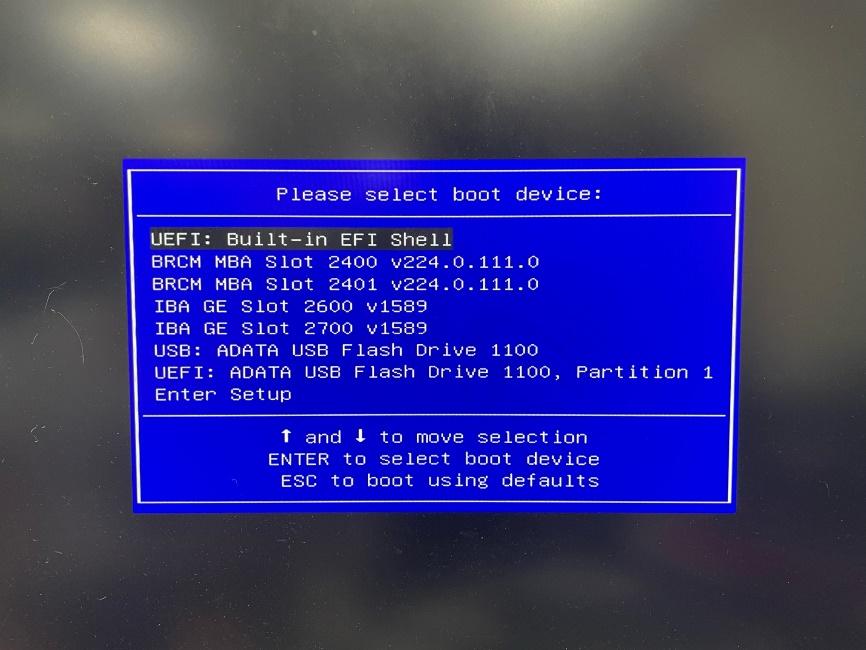

Access UEFI Shell: Insert the USB flash drive into your ASRock Rack server board, reboot the server, and hit F11 at the POST screen. Select "UEFI: Built-in EFI Shell". The USB flash drive should be named

FS0:. TypeFS0:and press enter.

-

Verify Current Firmware Version: Execute

bnxtmt.efito display the current firmware version.

-

Erase the Flash: Navigate to the "bnxtmt-lite-uefi-221.0.117.0-x64" folder and execute

bnxtmt.efi -sysop -none -no_swap –blankto erase the current firmware.

-

Flash the Firmware: Execute

bnxtmt.efi -sysop -none -no_swap -fnvm ASRR57416-2T.PKG -log FW1.logto flash the new firmware. Here,ASRR57416-2T.PKGis the firmware file.

-

Verify Updated Firmware Version: Run

bnxtmt.efiagain to verify the new firmware version.

-

Flash LAN Ports' MAC Addresses: Execute

bnxtmt.efi -none –m -log MAC1.log.

-

Input MAC Addresses: Enter the MAC addresses of both LAN ports when prompted. Write down these addresses beforehand.

-

Power Cycle: Turn off the system, power cycle the PSU, and then power everything back on.

Note: The MAC addresses for your LAN ports are crucial. Write them down before starting the update process as they need to be added during the command at step 8.

Proxmox Network Configuration Guide

Overview

Networking in Proxmox is managed through the Debian network interface configuration file at /etc/network/interfaces. This guide will walk you through the process of configuring the network interfaces and creating a Linux bridge for your Proxmox server.

Pre-requisites:

Before we begin, you should have:

- A Proxmox VE installed and configured on your server.

- Administrative or root access to your Proxmox VE server.

Step 1: Understand Proxmox Network Configuration Files

Proxmox network settings are mainly configured in two files:

- /etc/network/interfaces: This file describes the network interfaces available on your system and how to activate them. This file is critical for setting up bridged networking or configuring network interfaces manually.

- /etc/hosts: This file contains IP address to hostname mappings.

Step 2: Configure Primary Network Interface

First, open the network interfaces configuration file for editing:

nano /etc/network/interfaces

Set your primary network interface (e.g., enp9s0) to manual:

auto enp9s0

iface enp9s0 inet manual

Step 3: Configure Linux Bridge

Next, create a Linux bridge (vmbr0):

auto vmbr0

iface vmbr0 inet static

address 192.168.69.103

netmask 255.255.255.0

gateway 192.168.69.1

bridge_ports enp9s0

bridge_stp off

bridge_fd 0

Make sure to replace the address, netmask, and gateway parameters with the correct values for your network.
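A common mistake here is a gateway that falls outside the bridge's subnet. The sketch below uses Python's standard ipaddress module to check the example values before they go into /etc/network/interfaces. The helper name is illustrative.

```python
import ipaddress


def gateway_reachable(address: str, netmask: str, gateway: str) -> bool:
    """True if the gateway lies in the subnet defined by address/netmask."""
    iface = ipaddress.ip_interface(f"{address}/{netmask}")
    return ipaddress.ip_address(gateway) in iface.network


# Values from the vmbr0 example above
print(gateway_reachable("192.168.69.103", "255.255.255.0", "192.168.69.1"))
```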

Step 4: Apply Configuration

Save and exit the file, then restart the network service for the changes to take effect:

systemctl restart networking.service

Step 5: Verify Configuration

Use the ip a command to verify that the bridge was created successfully:

ip a

Step 6: Configure the Hosts File

The /etc/hosts file maps network addresses to hostnames. Open this file in a text editor:

nano /etc/hosts

Then, define the IP address and corresponding FQDN and hostname for your Proxmox server:

127.0.0.1 localhost

192.168.69.103 bkk03.yourdomain.com bkk03

# The following lines are desirable for IPv6 capable hosts

::1 localhost ip6-localhost ip6-loopback

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

Remember to replace 192.168.69.103, bkk03.yourdomain.com, and bkk03 with your server's IP address, FQDN, and hostname, respectively.

After updating the /etc/hosts file, save and exit the editor.

Important: Ensure the FQDN in your /etc/hosts matches the actual FQDN of your server. This FQDN should be resolvable from the server itself and any machines that will be accessing it. The Proxmox web interface uses this hostname to generate SSL certificates for the HTTPS interface, so incorrect resolution may lead to certificate issues.
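One way to catch a mismatch early is to parse the hosts file and confirm that the FQDN maps to the address configured on the bridge. A minimal sketch, assuming the standard hosts format (an address followed by one or more names, with # starting a comment); the function name is illustrative:

```python
def hosts_lookup(hosts_text: str, name: str) -> list[str]:
    """Return every address that the given hostname maps to."""
    addresses = []
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        address, *names = line.split()
        if name in names:
            addresses.append(address)
    return addresses


example = """
127.0.0.1 localhost
192.168.69.103 bkk03.yourdomain.com bkk03
# The following lines are desirable for IPv6 capable hosts
::1 localhost ip6-localhost ip6-loopback
"""
print(hosts_lookup(example, "bkk03.yourdomain.com"))  # ['192.168.69.103']
```

On a live system the same function can be fed the contents of /etc/hosts.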

By carefully following the instructions provided in this guide, administrators can ensure a robust and secure networking setup for their Proxmox servers. This guide should provide a good starting point for both new and experienced Proxmox administrators to understand and manage the network settings of their servers effectively.

Troubleshooting

If you run into issues during this process, you can use the following commands to troubleshoot:

- systemctl status networking.service: Displays the status of the networking service.

- journalctl -xeu networking.service: Provides detailed logs for the networking service.

- ip addr flush dev <interface> and ip route flush dev <interface>: Clears IP addresses and routes on a given interface.

- ip link delete <bridge> and ip link add name <bridge> type bridge: Deletes and recreates a bridge.

- ip link set <interface> master <bridge>: Assigns an interface to a bridge.

- ip addr add <ip>/<subnet> dev <bridge>: Assigns an IP address to a bridge.

Remember to replace <interface>, <bridge>, <ip>, and <subnet> with the appropriate values for your network.

For more detailed information about Proxmox networking, refer to the official Proxmox documentation.

Filesystem

Blockchain nodes, such as validators and archive nodes, require a highly reliable and efficient filesystem to operate effectively. The choice of filesystem can significantly affect the performance and reliability of these nodes. In light of performance concerns with ZFS, especially in ParityDB workloads as discussed in paritytech/polkadot-sdk/pull/1792, this guide provides a detailed approach to configuring a robust filesystem in Proxmox.

Filesystem Choices and Their Impact

The extensive use of I/O operations by blockchain nodes means the filesystem must manage write and read operations efficiently. CoW filesystems, while feature-rich and robust, introduce overhead that can degrade performance, as evidenced by the cited benchmarks.

Why Not ZFS or Btrfs for Blockchain Nodes?

- ZFS: While ZFS is revered for its data integrity, the added overhead from features like snapshotting, checksums, and the dynamic block size can significantly slow down write operations crucial for blockchain databases.

- Btrfs: Similar to ZFS, Btrfs offers advanced features such as snapshotting and volume management. However, its CoW nature means it can suffer from fragmentation and performance degradation over time, which is less than ideal for write-intensive blockchain operations.

Given these insights, a move towards a more traditional, performant, and linear filesystem is recommended.

Recommended Setup: LVM-thin with ext4

For high I/O workloads such as those handled by blockchain validators and archive nodes, LVM-thin provisioned with ext4 stands out:

- ext4: Offers a stable and linear write performance, which is critical for the high transaction throughput of blockchain applications.

- LVM-thin: Allows for flexible disk space allocation, providing the benefits of thin provisioning such as snapshotting and easier resizing without the CoW overhead.

Strategic Partitioning for Maximum Reliability and Performance

A well-thought-out partitioning scheme is crucial for maintaining data integrity and ensuring high availability.

RAID 1 Configuration for the Root Partition

Using a RAID 1 setup for the root partition provides mirroring of data across two disks, thus ensuring that the system can continue to operate even if one disk fails.

Implementing RAID 1:

1. Disk Preparation:

   - Select two identical disks (e.g., /dev/sda and /dev/sdb).

   - Partition both disks with an identical layout, reserving space for the root partition.

2. RAID Array Creation:

   - Execute the command to create the RAID 1 array:

     mdadm --create --verbose /dev/md0 --level=1 --raid-devices=2 /dev/sda1 /dev/sdb1

   - Format the RAID array with a resilient filesystem like ext4:

     mkfs.ext4 /dev/md0

   - Mount the RAID array at the root mount point during the Proxmox installation or manually afterward.
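Once the array is running, its health can be read from /proc/mdstat, where each array shows a status string such as [UU] (both members up) or [U_] (one member missing). The sketch below parses that text and reports degraded arrays. It is a simplified parser that assumes the usual layout of a header line followed by a status line per array, and the sample input is made up for illustration.

```python
import re


def degraded_arrays(mdstat_text: str) -> list[str]:
    """Return names of md arrays whose status string shows a missing member."""
    degraded = []
    current = None
    for line in mdstat_text.splitlines():
        header = re.match(r"^(md\w+)\s*:", line)
        if header:
            current = header.group(1)
            continue
        status = re.search(r"\[([U_]+)\]", line)
        if status and current:
            if "_" in status.group(1):
                degraded.append(current)
            current = None
    return degraded


sample = """\
Personalities : [raid1]
md0 : active raid1 sdb1[1] sda1[0]
      976630464 blocks super 1.2 [2/1] [U_]

unused devices: <none>
"""
print(degraded_arrays(sample))  # ['md0']
```

On a live host, pass in the contents of /proc/mdstat instead of the sample string.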

Boot Partition Configuration

Having two separate boot partitions provides redundancy, ensuring the system remains bootable in the event of a primary boot partition failure.

Configuring Boot Partitions:

1. Primary Boot Partition:

   - On the first disk, create a boot partition (e.g., /dev/sda2).

   - Install the bootloader and kernel images here.

2. Fallback Boot Partition:

   - Mirror the primary boot partition to a second disk (e.g., /dev/sdb2).

   - Configure the bootloader to fall back to this partition if the primary boot fails.

LVM-Thin Provisioning on Data Disks

LVM-thin provisioning is recommended for managing data disks. It allows for efficient disk space utilization by provisioning "thin" volumes that can be expanded dynamically as needed.

Steps for LVM-Thin Provisioning:

1. Initialize LVM Physical Volumes:

   - Use the pvcreate command on the designated data disks:

     pvcreate /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1

2. Create a Volume Group:

   - Group the initialized disks into a volume group:

     vgcreate vg_data /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1

3. Establish a Thin Pool:

   - Create a thin pool within the volume group to hold the thin volumes:

     lvcreate --size 100G --thinpool data_tpool vg_data

4. Provision Thin Volumes:

   - Create thin volumes from the pool as needed for containers or virtual machines, naming the pool as vg_data/data_tpool:

     lvcreate --virtualsize 500G --thin --name data_volume vg_data/data_tpool

5. Format and Mount Thin Volumes:

   - Format the volumes with a filesystem, such as ext4, and mount them:

     mkfs.ext4 /dev/vg_data/data_volume

     mkdir -p /mnt/data_volume

     mount /dev/vg_data/data_volume /mnt/data_volume
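Note that the example creates a 500G thin volume in a 100G pool. That is the point of thin provisioning, but it also means the pool can fill up before the volume does. A small sketch of the overcommit arithmetic, using the sizes from the commands above; the function name is illustrative:

```python
def overcommit_ratio(pool_size_gb: float, virtual_sizes_gb: list[float]) -> float:
    """Sum of thin volume virtual sizes divided by the physical pool size."""
    return sum(virtual_sizes_gb) / pool_size_gb


POOL_GB = 100        # lvcreate --size 100G --thinpool data_tpool vg_data
VOLUMES_GB = [500]   # lvcreate --virtualsize 500G ...

ratio = overcommit_ratio(POOL_GB, VOLUMES_GB)
print(f"overcommit: {ratio:.1f}x")
if ratio > 1:
    print("pool is overcommitted; monitor data usage with lvs")
```

A ratio above 1 is not an error in itself, but it calls for monitoring pool usage and extending the pool before it runs out of space.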

Integrating LVM-Thin Volumes with Proxmox

Proxmox's pct command-line tool can manage container storage by mapping LVM-thin volumes to container mount points.

Unlabored

Our Proxmox infrastructure is deployed effortlessly using a comprehensive collection of rolebooks that deploy the complete infrastructure as code. The collection is now available on GitHub.

Minimizing Our Carbon Footprint

In the relentless march of blockchain technology, we've chosen a path less trodden. Our commitment isn't just to innovation, but to the delicate balance between progress and planetary health.

Polkadot

We've anchored our on-chain operations to the Polkadot network. It's not merely a blockchain; it's a testament to what's possible when brilliant minds converge on the problem of sustainable computation. Polkadot doesn't just process transactions—it redefines the very notion of blockchain efficiency.

The Migration to Conscious Computing

Our servers have found a new home in STT's cutting-edge data center. It's more than a facility; it's a bold statement against the status quo of energy-hungry tech. By 2030, it aims to erase its carbon footprint entirely. Today, it already neutralizes 100% of its emissions—a rare beacon of responsibility in our industry.

The Numbers Don't Lie

Our current rack sips a mere 595.1 kWh monthly. In Thailand's energy landscape, that translates to 335 kg of CO2 equivalent. It's a number we're not satisfied with, but one we're actively working to drive down to zero.

The Road Ahead

Our journey with STT and Polkadot isn't just about ticking boxes or greenwashing our image. It's a fundamental shift in how we view the relationship between technology and our planet. We're not waiting for regulations or market pressures—we're driving change from within.

Team

Tommi, the founder of Rotko Network, represents a generation that mastered the QWERTY keyboard before perfecting handwriting. With over two decades of experience in building hardware, software, and managing servers, Tommi's journey through the digital landscape is as old as the commercial internet itself.

His adventure with Bitcoin began in 2008, running the first version on a Pentium D950. However, it wasn't until 2013, when Snowden's revelations confirmed his most paranoid thoughts about mass surveillance, that Tommi fully grasped the importance of decentralized systems. He realized that the internet, once a user-driven landscape, had fallen under the control of a handful of corporations, compromising user privacy and freedom.

This eye-opening realization led to the creation of Rotko Network. Tommi's mission is to reshape the internet into a space truly owned by its users, where privacy is fundamental and centralized control is minimized. With the dedication of a seasoned software enthusiast and the heart of a digital freedom fighter, Tommi stands at the helm of this initiative, working to end data exploitation and build a user-centric, user-owned internet infrastructure.

Meet Dan, a bona fide wizard of programming who cut his teeth coding back in the 90s, drawing inspiration from the music demoscene. With more than two decades under his belt, he's a seasoned veteran who understands the ins and outs of the game.

Just like many of us old-timers, he has a deep appreciation for functional programming and a penchant for clarity in code, with Rust being his go-to tool. He's got this knack for building software that runs as close to the metal as possible, extracting every bit of performance he can get.

One of his remarkable feats is constructing intricate drum machines entirely from scratch, a testament to his understanding of complex systems and algorithmic creativity. He's not just a coder; he's a craftsman.

Meet Al, our SEA timezone DevOps maestro, whose journey from pure mathematics to the world of backend development and deployment is as fascinating as a well-optimized algorithm. With a fresh master's degree in mathematics, Al's love affair with Linux was the plot twist that redirected his career path from abstract theorems to applied math in the form of code.

While his classmates were wrestling with complex integrals, Al was falling head over heels for the elegance of Linux systems. His setup is a masterpiece of minimalism and functionality, reflecting the same precision he once applied to mathematical proofs. You might catch him explaining load balancing algorithms with the same enthusiasm he once reserved for discussing the Riemann hypothesis.

But it's in the realm of deployment where Al truly shines. He treats our infrastructure like a complex equation, constantly seeking the most elegant solution. His latest obsession? Exploring how NixOS can bring the immutability and reproducibility of mathematical constants to our systems. Al's unique background brings a fresh perspective to our team, proving that in the world of tech, a solid foundation in mathematical thinking is an invaluable variable in the equation of success.

Walt, our Americas timezone NOC virtuoso, is the digital equivalent of a Swiss Army knife - versatile, reliable, and always ready to tackle the unexpected. With a rich background in full-stack development and systems administration, Walt brings a unique perspective that bridges the often treacherous gap between application development and infrastructure management. His expertise is a tapestry woven from threads of Linux wizardry, Docker sorcery, and cloud platform mastery, creating a skillset that's as diverse as it is deep.

In the realm of automation, Walt is nothing short of a maestro. Armed with Python and Bash as his instruments, he orchestrates symphonies of scripts that turn complex, time-consuming tasks into elegant, efficient processes. But Walt's true superpower lies in his approach to problem-solving. Where others see insurmountable obstacles, Walt sees puzzles waiting to be solved, often conjuring creative solutions that leave the rest of the team wondering if he's secretly a tech wizard in disguise.

With over 40 years of diverse and significant technical experience, Mikko is the ideal advisor for creating Internet and networking infrastructure at Rotko Networks. His expertise spans across all technical layers, from layer 1 hardware programming to layer 7 application interfaces, making him an essential asset, especially considering the CEO's top-to-bottom learning path.

His technical journey began in the mid-1980s at Nokia Mobile Phones, where he hand-wrote UI with NEC's Assembly without a compiler, demonstrating a profound understanding of low-level programming. His most notable achievement at Nokia was the invention of the menu buttons on the display, a pioneering feature that has become ubiquitous in mobile user interfaces.

One of his most noteworthy roles was serving as the IT Manager at the University of Turku, where he was responsible for managing and upgrading the entire IT infrastructure, including a modern data center and network services. He implemented crucial projects such as data center upgrades, WLAN enhancements, and network topology redesigns, and developed vital services such as private cloud storage and learning platforms.

His profound knowledge of technologies like Novell NetWare, AD, MS Exchange, backup and storage systems, IIS, ISA Firewall, DNS, and DHCP, coupled with his broad understanding of both low-level and high-level systems, makes him a tremendous asset for Rotko Networks. His broad and deep technical expertise ensures he will provide significant guidance in building a robust and efficient Internet and networking infrastructure.

Resources

Monitoring

- speedtest-go - A simple command line interface for testing internet bandwidth globally

- vaping - A vaping monitoring framework written in Python

- smokeping - Latency Monitor & Packet Loss Tracker

- bird - BIRD Internet Routing Daemon

- Atlas Probe - RIPE Atlas Software Probe

- gatus - Service health dashboard

Alerts

Web Tools

Post Mortems

Why We Write Postmortems

At Rotko Network, we believe in radical transparency. While it's common in our industry to see providers minimize their technical issues or deflect blame onto others, we choose a different path. Every failure is an opportunity to learn and improve - not just for us, but for the broader network engineering community.

We've observed a concerning trend where major providers often:

- Minimize the scope of incidents

- Provide vague technical details

- Deflect responsibility to third parties

- Hide valuable learning opportunities

A prime example of this behavior can be seen in the October 2024 OVHcloud incident, where their initial response blamed a "peering partner" without acknowledging the underlying architectural (basic filtering) vulnerabilities that allowed the route leak to cause such significant impact.

In contrast, our postmortems:

- Provide detailed technical analysis

- Acknowledge our mistakes openly

- Share our learnings

- Document both immediate fixes and longer-term improvements

- Include specific timeline data for accountability

- Reference relevant RFCs and technical standards

Directory

2024

- 2024-12-19: Edge Router Configuration Incident

- Impact: 95-minute connectivity loss affecting AMSIX, BKNIX, and HGC Hong Kong IPTx

- Root Cause: Misconceptions about router-id uniqueness requirements and OSPF behavior

- Status: Partial resolution, follow-up planned for 2025

2025

- 2025-03-06: Router Misconfiguration Incident

- Impact: 18-minute network outage resulting in lower era points for validators and downtime for RPC nodes

- Root Cause: Misconfiguration between a router and a switch

- Status: Fully recovered

Network Outage Postmortem (2024-12-19/20)

Summary

A planned intervention to standardize router-id configurations across our edge routing infrastructure resulted in an unexpected connectivity loss affecting our AMSIX Amsterdam, BKNIX, and HGC Hong Kong IPTx peering sessions. The incident lasted approximately 95 minutes (23:55 UTC to 01:30 UTC) and impacted our validator performance on both Kusama and Polkadot networks. Specifically, this resulted in missed votes during Kusama Session 44,359 at Era 7,496 and Polkadot Session 10,010 at Era 1,662 with a 0.624 MVR (missed vote ratio).

Technical Details

The root cause was traced to an attempt to resolve a pre-existing routing anomaly in which our edge routers were operating with multiple router-ids across different uplink connections and iBGP sessions. This heterogeneous router-id configuration had been causing nexthop resolution failures and an inability to carry transit traffic across our BGP infrastructure.

The original misconfiguration stemmed from an incorrect assumption that router-ids needed to be publicly unique at Internet exchange points. This is not the case - router-ids only need to be unique within our Interior Gateway Protocol (IGP) domain. This misunderstanding led to the implementation of multiple router-ids in loopback interfaces, creating unnecessary complexity in our routing infrastructure.

During the remediation attempt to standardize OSPF router-ids to a uniform value across the infrastructure, we encountered an unexpected failure mode that propagated through our second edge router, resulting in a total loss of connectivity despite router and uplink redundancy. The exact mechanism of the secondary failure remains under investigation - the cascade effect that caused our redundant edge router to lose connectivity suggests an underlying architectural vulnerability in our BGP session management.

Response Timeline

- 23:55 UTC: Initiated planned router-id standardization

- ~23:56 UTC: Primary connectivity loss detected

- ~23:56 UTC: Secondary edge router unexpectedly lost connectivity

- 01:30 UTC: Full service restored via configuration rollback
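The 95-minute figure can be checked directly against the first and last timeline entries. The sketch below is a minimal helper (the name `outage_minutes` is illustrative) that assumes both timestamps are UTC and that the outage crossed midnight at most once.

```python
from datetime import datetime, timedelta


def outage_minutes(start_hhmm: str, end_hhmm: str) -> int:
    """Return the duration in minutes between two HH:MM timestamps.

    Assumes both are in the same timezone and that the end time falls
    on the next day whenever it is earlier than the start time.
    """
    fmt = "%H:%M"
    start = datetime.strptime(start_hhmm, fmt)
    end = datetime.strptime(end_hhmm, fmt)
    if end < start:
        # The incident ran past midnight, so the end belongs to the next day.
        end += timedelta(days=1)
    return int((end - start).total_seconds() // 60)


# Start of maintenance and full restoration, taken from the timeline above.
print(outage_minutes("23:55", "01:30"))  # 95
```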

Mitigation

Recovery was achieved through an onsite restoration of backed-up router configurations. While this approach was successful, the 95-minute resolution time indicates a need for more robust rollback procedures and, above all, more caution when changing network configuration.

Impact

- Kusama validator session 44,359 experienced degraded performance with an MVR of 1 in Era 7,496 and missed votes in Era 7,495

- Polkadot validator session 10,010 experienced degraded performance with 0.624 MVR in Era 1,662

- Temporary loss of peering sessions with AMSIX, BKNIX, and HGC Hong Kong IPTx
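To make the MVR values above easier to interpret, here is a minimal sketch of how a missed vote ratio can be computed. It assumes MVR is simply missed votes divided by all expected votes; the exact definition used by the tool that reported these numbers is not stated here, and the vote counts in the example are hypothetical values chosen only to reproduce the quoted ratios.

```python
def missed_vote_ratio(missed: int, cast: int) -> float:
    """Return missed / (missed + cast), the assumed definition of MVR."""
    total = missed + cast
    if total == 0:
        raise ValueError("no votes were expected in this session")
    return missed / total


# Hypothetical counts: 78 missed out of 125 expected votes gives 0.624.
print(missed_vote_ratio(missed=78, cast=47))

# An MVR of 1 means every expected vote in the session was missed.
print(missed_vote_ratio(missed=10, cast=0))
```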

Current Status and Future Plans

The underlying routing issue (multiple router-ids in loopback) remains unresolved. Due to the maintenance freeze in internet exchanges during the holiday period, the resolution has been postponed until next year. To ensure higher redundancy during the next maintenance window, we plan to install a third edge router before attempting the configuration standardization again.

Future Work

- Implementation of automated configuration validation testing

- Enforce usage of Safe Mode during remote maintenance to prevent cascading failures

- Investigation into BGP session interdependencies between edge routers

- Read RFC 2328 to understand the actual protocol and how vendor implementations differ

- Installation and configuration of third edge router to provide N+2 redundancy during upcoming maintenance
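As one possible starting point for the automated configuration validation listed above, the sketch below checks the two router-id properties discussed in this postmortem: each router should carry a single router-id, and no router-id should be shared within the routing domain. The inventory format, router names, and function name are assumptions for illustration (the addresses come from the 192.0.2.0/24 documentation range); a real check would first parse the ids out of exported router configurations.

```python
from collections import defaultdict


def validate_router_ids(configured: dict[str, list[str]]) -> list[str]:
    """Return human-readable problems found in a router-id inventory.

    `configured` maps a router name to every router-id found in its
    configuration (hypothetical format, not a vendor export).
    """
    problems = []
    owners = defaultdict(set)  # router-id -> routers that use it

    for router, ids in sorted(configured.items()):
        unique_ids = sorted(set(ids))
        if not unique_ids:
            problems.append(f"{router}: no router-id configured")
        elif len(unique_ids) > 1:
            problems.append(f"{router}: multiple router-ids {unique_ids}")
        for rid in unique_ids:
            owners[rid].add(router)

    for rid, routers in sorted(owners.items()):
        if len(routers) > 1:
            problems.append(f"router-id {rid} shared by {sorted(routers)}")

    return problems


# Hypothetical inventory: edge1 has two ids, edge2 and edge3 collide.
inventory = {
    "edge1": ["192.0.2.1", "192.0.2.11"],
    "edge2": ["192.0.2.2"],
    "edge3": ["192.0.2.2"],
}
for problem in validate_router_ids(inventory):
    print(problem)
```

Running a check like this before a maintenance window would not explain the cascade seen in this incident, but it would flag both the original multiple router-id state and any accidental duplicate introduced during standardization.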